Effective data augmentation methods for text input in Keras include synonym replacement (WordNet), back-translation, random word insertion/deletion, paraphrasing with LLMs, and word substitution based on static (Word2Vec) or contextual (BERT) embeddings, all of which generate diverse training samples.

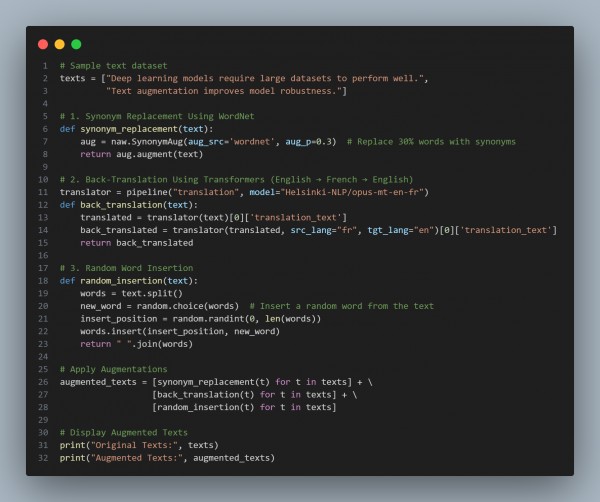

Here is the code snippet given below:

In the above code we are using the following techniques:

- Synonym Replacement (WordNet/NLPAug):
  - Replaces words with synonyms while preserving the sentence's meaning.

- Back-Translation (Helsinki-NLP):
  - Translates text into another language and back, producing natural rewordings.

- Random Word Insertion & Deletion:
  - Randomly adds or removes words, injecting noise and diversity that help reduce overfitting.

- Word and Contextual Embedding Augmentation (Word2Vec/BERT):
  - Replaces words with words whose embeddings are close to the originals, producing realistic variations.

- Paraphrasing with LLMs (GPT-3, T5, Pegasus):
  - Generates syntactically diverse yet semantically equivalent sentences.
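A minimal sketch of synonym replacement in plain Python, assuming a small hand-written synonym table: `SYNONYMS` and `synonym_replace` are hypothetical names used only for illustration. In a real pipeline the synonyms would come from WordNet (for example through NLTK or NLPAug) rather than a hard-coded dictionary, and the augmented strings would then be fed to the Keras model like any other training text.

```python
import random

# Hypothetical toy synonym table; a real pipeline would look synonyms up in WordNet.
SYNONYMS = {
    "quick": ["fast", "speedy"],
    "happy": ["glad", "cheerful"],
    "movie": ["film"],
    "great": ["excellent", "superb"],
}


def synonym_replace(text, n=1, rng=None):
    """Replace up to n words that have an entry in SYNONYMS with a random synonym."""
    rng = rng if rng is not None else random.Random()
    words = text.split()
    # Indices of words we know synonyms for (lookup is case-insensitive).
    candidates = [i for i, w in enumerate(words) if w.lower() in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i].lower()])
    return " ".join(words)


if __name__ == "__main__":
    # Two of the three known words (great, movie, happy) get replaced.
    print(synonym_replace("a great movie with a happy ending", n=2, rng=random.Random(0)))
```

Passing a seeded `random.Random` makes the augmentation reproducible, which is handy when comparing training runs.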
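Back-translation is a round trip through a pivot language, keeping only outputs that differ from the input. The sketch below assumes the two translation steps are passed in as plain functions; the word-for-word `toy_en_to_fr` and `toy_fr_to_en` translators are hypothetical stand-ins so the example runs without a model. In practice these functions would wrap real machine-translation models such as the Helsinki-NLP MarianMT checkpoints (for example `Helsinki-NLP/opus-mt-en-fr` and `Helsinki-NLP/opus-mt-fr-en`).

```python
def back_translate(texts, to_pivot, from_pivot):
    """Round-trip each text through a pivot language; keep only changed outputs."""
    variants = []
    for text in texts:
        round_trip = from_pivot(to_pivot(text))
        # Identical round trips add no diversity, so they are dropped.
        if round_trip.strip().lower() != text.strip().lower():
            variants.append(round_trip)
    return variants


# Hypothetical toy word-for-word "translators" so the sketch runs without a model.
# The return mapping is deliberately not an exact inverse ("film" -> "movie"),
# mimicking the rewording a real translation model introduces.
EN_FR = {"the": "le", "film": "film", "was": "était", "great": "génial"}
FR_EN = {"le": "the", "film": "movie", "était": "was", "génial": "great"}


def toy_en_to_fr(text):
    return " ".join(EN_FR.get(w, w) for w in text.split())


def toy_fr_to_en(text):
    return " ".join(FR_EN.get(w, w) for w in text.split())


if __name__ == "__main__":
    print(back_translate(["the film was great"], toy_en_to_fr, toy_fr_to_en))
```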
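Random insertion and deletion need nothing beyond the standard `random` module. This is an illustrative version in the spirit of EDA (Easy Data Augmentation), not any specific library's API: `random_deletion` drops each word with probability `p`, and `random_insertion` inserts `n` words at random positions, by default copies of words already in the sentence. The optional `vocab` argument (for example, a list of synonyms) is an assumed extension for illustration.

```python
import random


def random_deletion(text, p=0.1, rng=None):
    """Drop each word independently with probability p, keeping at least one word."""
    rng = rng if rng is not None else random.Random()
    words = text.split()
    kept = [w for w in words if rng.random() >= p]
    # Never return an empty sample; fall back to one random original word.
    if not kept and words:
        kept = [rng.choice(words)]
    return " ".join(kept)


def random_insertion(text, n=1, vocab=None, rng=None):
    """Insert n words at random positions.

    Inserted words are drawn from `vocab` if given, otherwise from the
    sentence's own words.
    """
    rng = rng if rng is not None else random.Random()
    words = text.split()
    pool = list(vocab) if vocab else list(words)
    for _ in range(n):
        if not pool:
            break
        pos = rng.randint(0, len(words))  # inclusive, so appending at the end is allowed
        words.insert(pos, rng.choice(pool))
    return " ".join(words)


if __name__ == "__main__":
    rng = random.Random(42)
    s = "the service was slow but the food was good"
    print(random_deletion(s, p=0.2, rng=rng))
    print(random_insertion(s, n=2, rng=rng))
```

Keeping `p` and `n` small is the usual choice, since heavy noise can change what the sentence means and make its label wrong.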
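Static embedding-based substitution boils down to a nearest-neighbour lookup. The sketch below assumes a tiny hypothetical `EMBEDDINGS` table of 3-dimensional vectors in place of real Word2Vec vectors. It ranks neighbours by cosine similarity and swaps each known word for one of its nearest neighbours with probability `p`. Contextual models such as BERT instead predict a replacement from the surrounding sentence (typically by masking the word), which this static lookup does not capture.

```python
import math
import random

# Hypothetical 3-dimensional vectors for illustration only; real Word2Vec
# vectors come from a trained model and usually have hundreds of dimensions.
EMBEDDINGS = {
    "good": [0.90, 0.10, 0.00],
    "great": [0.85, 0.15, 0.05],
    "excellent": [0.80, 0.20, 0.10],
    "bad": [-0.90, 0.10, 0.00],
    "terrible": [-0.85, 0.20, 0.05],
    "movie": [0.00, 0.90, 0.30],
    "film": [0.05, 0.85, 0.35],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest_neighbours(word, k=1):
    """Return the k vocabulary words most cosine-similar to `word`, excluding itself."""
    target = EMBEDDINGS[word]
    scored = [(cosine(target, vec), w) for w, vec in EMBEDDINGS.items() if w != word]
    scored.sort(reverse=True)
    return [w for _, w in scored[:k]]


def embedding_replace(text, p=0.3, k=1, rng=None):
    """With probability p, replace each in-vocabulary word with one of its k nearest neighbours."""
    rng = rng if rng is not None else random.Random()
    out = []
    for w in text.split():
        if w in EMBEDDINGS and rng.random() < p:
            out.append(rng.choice(nearest_neighbours(w, k)))
        else:
            out.append(w)
    return " ".join(out)


if __name__ == "__main__":
    print(embedding_replace("a good movie", p=1.0))
```

One practical caveat: in real static embeddings, antonyms such as "good" and "bad" often sit close together, so it is worth checking that replacements do not flip the label of, say, a sentiment example.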

Hence, combining synonym replacement, back-translation, word insertion/deletion, embedding-based substitution, and paraphrasing increases the diversity of a text dataset, which can help Keras models generalize better and become more robust.
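To turn any string-to-string augmentation function into extra training data, a helper like the hypothetical `augment_dataset` below can append augmented copies alongside the originals while copying each label unchanged. It is a sketch that assumes the augmenters preserve meaning. Augmentation is generally applied to the training split only, so validation and test data stay untouched.

```python
import random


def augment_dataset(texts, labels, augmenters, copies=1, seed=0):
    """Return the original samples plus `copies` augmented variants of each.

    `augmenters` is a list of functions str -> str; one is picked at random
    for every new sample. Labels are copied unchanged, so the augmenters
    must preserve the meaning of the text.
    """
    if len(texts) != len(labels):
        raise ValueError("texts and labels must have the same length")
    rng = random.Random(seed)
    out_texts, out_labels = list(texts), list(labels)
    for text, label in zip(texts, labels):
        for _ in range(copies):
            aug = rng.choice(augmenters)
            out_texts.append(aug(text))
            out_labels.append(label)
    return out_texts, out_labels


if __name__ == "__main__":
    texts = ["the food was great", "the service was slow"]
    labels = [1, 0]
    # Toy augmenters for illustration; real ones would do synonym replacement,
    # back-translation, random deletion, and so on.
    augmenters = [
        lambda t: t.replace("great", "excellent"),
        lambda t: " ".join(t.split()[1:]),  # drop the first word
    ]
    X, y = augment_dataset(texts, labels, augmenters, copies=1)
    print(len(X), len(y))
```

The returned lists can then be vectorized as usual, for example with a Keras `TextVectorization` layer, before calling `model.fit`.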
