To fix tuning issues with batch normalization (BN) layers in GANs, follow these steps:

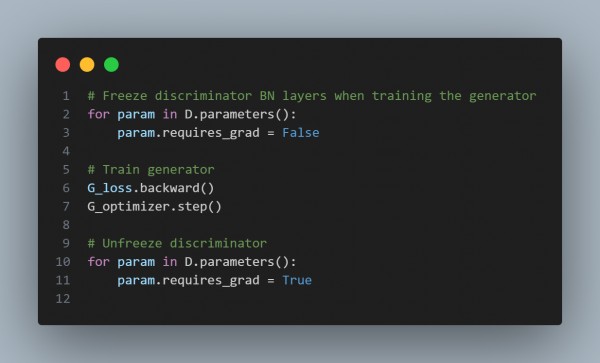

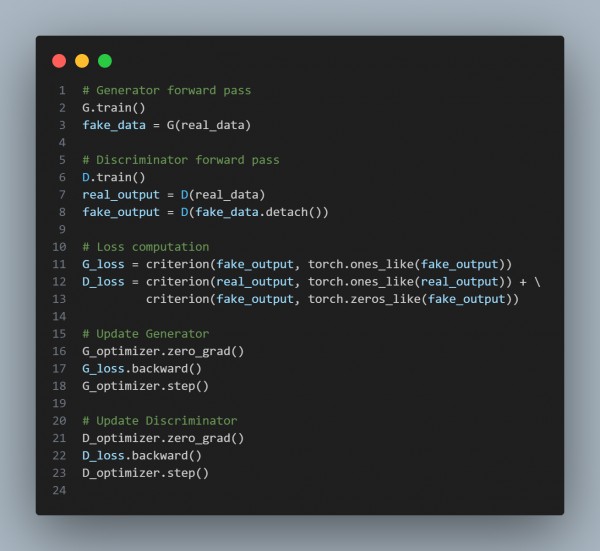

- Train Generators and Discriminators Separately

- Give the generator and the discriminator their own BN layers and optimizers, and update each network in its own step so that tuning one does not disturb the other.
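A minimal PyTorch sketch of separate update steps follows. The toy networks `G` and `D`, their layer sizes, the Adam settings, and the helper names `discriminator_step` and `generator_step` are assumptions made for illustration; they are not taken from the original answer.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy networks; each one owns its own BN layer.
G = nn.Sequential(nn.Linear(8, 16), nn.BatchNorm1d(16), nn.ReLU(), nn.Linear(16, 4))
D = nn.Sequential(nn.Linear(4, 16), nn.BatchNorm1d(16), nn.LeakyReLU(0.2), nn.Linear(16, 1))

# One optimizer per network, so each step only touches one set of parameters.
opt_g = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))
bce = nn.BCEWithLogitsLoss()

def discriminator_step(real):
    """Update D only; the fake batch is detached so no gradient reaches G."""
    n = real.size(0)
    fake = G(torch.randn(n, 8)).detach()
    opt_d.zero_grad()
    # Real and fake samples pass through D as separate batches,
    # so D's BN statistics are computed per batch type.
    loss = bce(D(real), torch.ones(n, 1)) + bce(D(fake), torch.zeros(n, 1))
    loss.backward()
    opt_d.step()
    return loss.item()

def generator_step(batch_size):
    """Update G only; opt_d is not stepped here, so D's weights stay fixed."""
    opt_g.zero_grad()
    loss = bce(D(G(torch.randn(batch_size, 8))), torch.ones(batch_size, 1))
    loss.backward()
    opt_g.step()
    return loss.item()
```

Calling `discriminator_step(real_batch)` and then `generator_step(batch_size)` once per iteration alternates the two updates. Feeding real and fake batches through `D` separately is one common choice, not the only one.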

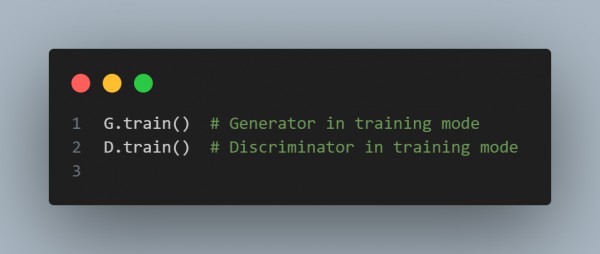

- Use Different Modes for BN

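- Call `model.train()` explicitly before training and `model.eval()` before evaluation or sampling, so that BN uses batch statistics while training and its stored running statistics otherwise.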
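The difference between the two modes can be seen on a single BN layer. This is a small sketch assuming PyTorch; the tensor sizes and the shifted, scaled input are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

bn = nn.BatchNorm1d(3)
x = torch.randn(32, 3) * 5 + 10  # input with mean near 10 and std near 5

# Training mode: normalize with the statistics of the current batch
# and update the running estimates as a side effect.
bn.train()
y_train = bn(x)

# Evaluation mode: normalize with the stored running estimates,
# which are not updated by this forward pass.
bn.eval()
with torch.no_grad():
    y_eval = bn(x)

print("train-mode output mean:", y_train.mean(dim=0))
print("eval-mode output mean: ", y_eval.mean(dim=0))
```

After only one training batch the running estimates are still far from the data statistics, so the two outputs differ noticeably. In a GAN loop this is why the generator is usually switched with `eval()` before sampling and back with `train()` before the next update.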

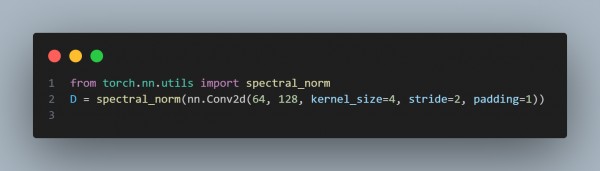

- Avoid BN in Discriminator

- Replace BN in the discriminator with another technique, such as spectral normalization, for more stable training.
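As a rough sketch of this substitution, the discriminator below wraps each weight layer in `torch.nn.utils.spectral_norm` and contains no BN layers. The helper name `make_discriminator`, the channel widths, and the 32x32 input size are assumptions for illustration.

```python
import torch
import torch.nn as nn
from torch.nn.utils import spectral_norm

def make_discriminator(in_channels=3, width=32):
    """Hypothetical BN-free discriminator for 32x32 images."""
    return nn.Sequential(
        spectral_norm(nn.Conv2d(in_channels, width, 4, stride=2, padding=1)),  # 32 -> 16
        nn.LeakyReLU(0.2),
        spectral_norm(nn.Conv2d(width, width * 2, 4, stride=2, padding=1)),    # 16 -> 8
        nn.LeakyReLU(0.2),
        nn.Flatten(),
        spectral_norm(nn.Linear(width * 2 * 8 * 8, 1)),  # one logit per image
    )

D = make_discriminator()
logits = D(torch.randn(4, 3, 32, 32))
print(logits.shape)
```

Spectral normalization constrains the weights rather than the activations, so the discriminator's output for one sample no longer depends on the other samples in the batch.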

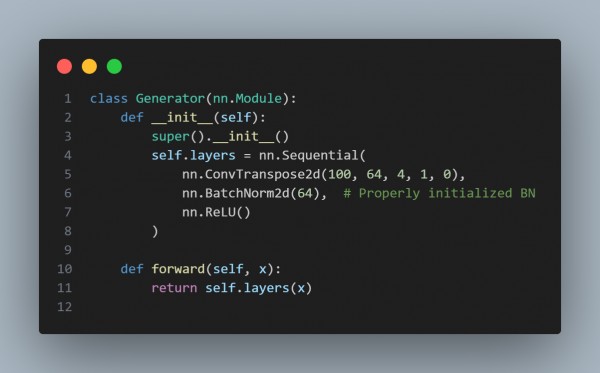

- Track Running Statistics Correctly

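- Ensure BN running statistics (the running mean and variance) are updated during training: the layers must be in training mode with `track_running_stats` enabled.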
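One way to check that the running statistics are actually being updated is to inspect the layer's buffers. The sketch below assumes PyTorch's `nn.BatchNorm2d`; the input shape, the offset of 3.0, and the 50 iterations are illustrative values.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# track_running_stats=True is the default; it is spelled out here for clarity.
bn = nn.BatchNorm2d(4, momentum=0.1, track_running_stats=True)
x = torch.randn(8, 4, 5, 5) + 3.0  # per-channel mean near 3

bn.train()
for _ in range(50):
    bn(x)  # each training-mode forward pass updates the running estimates

print("batches tracked:", bn.num_batches_tracked.item())
print("running mean:   ", bn.running_mean)

# In eval mode the buffers are read but no longer written.
bn.eval()
frozen = bn.running_mean.clone()
bn(x)
print("unchanged in eval:", torch.equal(frozen, bn.running_mean))
```

If `num_batches_tracked` stays at zero during training, the layer is probably in eval mode or was created with `track_running_stats=False`.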

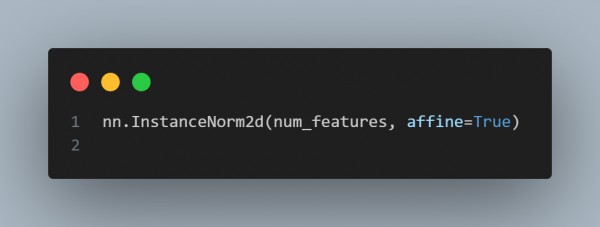

- Use Instance Normalization

- For better generalization, replace BN with instance normalization in the generator, which normalizes each sample on its own rather than across the batch.
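A possible generator using `nn.InstanceNorm2d` in place of BN is sketched here. The helper name `make_generator`, the latent size, the channel widths, and the 16x16 output resolution are assumptions chosen to keep the example small.

```python
import torch
import torch.nn as nn

def make_generator(latent_dim=16, width=32, out_channels=3):
    """Hypothetical generator that maps a latent vector to a 16x16 image."""
    return nn.Sequential(
        nn.ConvTranspose2d(latent_dim, width, 4, stride=1, padding=0),         # 1x1 -> 4x4
        nn.InstanceNorm2d(width, affine=True),
        nn.ReLU(),
        nn.ConvTranspose2d(width, width // 2, 4, stride=2, padding=1),         # 4x4 -> 8x8
        nn.InstanceNorm2d(width // 2, affine=True),
        nn.ReLU(),
        nn.ConvTranspose2d(width // 2, out_channels, 4, stride=2, padding=1),  # 8x8 -> 16x16
        nn.Tanh(),
    )

G = make_generator()
z = torch.randn(4, 16, 1, 1)
images = G(z)
print(images.shape)
```

Because instance normalization computes statistics per sample and per channel, the image generated for one latent vector does not depend on which other vectors share its batch.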

The code snippets in the images above illustrate these steps.

Hence, by properly managing normalization layers, you can improve training stability and model performance in GANs.

Related Post: How to implement batch normalization for stability when training GANs
