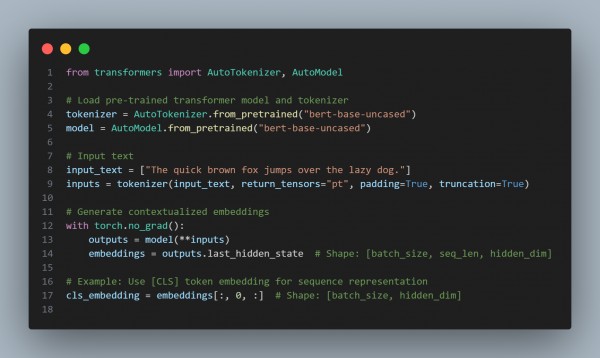

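To use transformer encoders to generate contextualized embeddings for input sequences in text generation, you pass the input sequence through a pre-trained transformer model such as BERT and extract its hidden states, typically from the final layer. Hidden states can be extracted the same way from decoder-only models such as GPT, although those are not encoders. Here is the code you can refer to: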
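As a minimal sketch, assuming PyTorch, the Hugging Face `transformers` library, and the `bert-base-uncased` checkpoint (none of which is named in this answer), extraction could look like the following. The function names `load_encoder`, `encode`, and `embed_texts` are illustrative, not part of any library.

```python
import torch
from transformers import AutoModel, AutoTokenizer


def load_encoder(model_name: str = "bert-base-uncased"):
    """Load a tokenizer and encoder. The checkpoint name is an assumption."""
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()  # disable dropout so embeddings are deterministic
    return tokenizer, model


def encode(model, input_ids, attention_mask):
    """Return (token_embeddings, sequence_embedding) for a batch of token ids."""
    with torch.no_grad():  # pure feature extraction, no gradients needed
        outputs = model(input_ids=input_ids, attention_mask=attention_mask)
    token_embeddings = outputs.last_hidden_state    # (batch, seq_len, hidden)
    sequence_embedding = token_embeddings[:, 0, :]  # position 0, [CLS] for BERT
    return token_embeddings, sequence_embedding


def embed_texts(texts, tokenizer, model):
    """Tokenize raw strings (with padding) and encode them in one batch."""
    batch = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    return encode(model, batch["input_ids"], batch["attention_mask"])
```

Calling `embed_texts(["some input text"], *load_encoder())` downloads the checkpoint on first use and returns one embedding per token plus one embedding per sequence.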

The code above uses the following:

- Token Embeddings: Each token in the sequence gets a contextualized embedding that captures its meaning in context.
- Sequence Embedding: For BERT-style models, use the [CLS] token embedding (index 0) as a representation of the whole sequence.
- Fine-Tuning: Fine-tune the transformer on your text generation task for better results.
- Applications: Use the embeddings as inputs to downstream models (e.g., for sequence generation).
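The last two points can be sketched as follows, assuming PyTorch. The `EmbeddingConditionedDecoder` class and the `set_encoder_trainable` helper are hypothetical names for illustration, and a GRU decoder is only one of many possible downstream models.

```python
import torch
from torch import nn


class EmbeddingConditionedDecoder(nn.Module):
    """Hypothetical downstream model: a GRU decoder seeded by a sequence embedding."""

    def __init__(self, hidden_size: int, vocab_size: int):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, hidden_size)
        self.gru = nn.GRU(hidden_size, hidden_size, batch_first=True)
        self.out = nn.Linear(hidden_size, vocab_size)

    def forward(self, sequence_embedding, target_ids):
        # The sequence embedding becomes the initial hidden state: (1, batch, hidden)
        h0 = sequence_embedding.unsqueeze(0).contiguous()
        states, _ = self.gru(self.embed(target_ids), h0)
        return self.out(states)  # (batch, target_len, vocab_size) logits


def set_encoder_trainable(encoder: nn.Module, trainable: bool) -> None:
    """Freeze the encoder for feature extraction, or unfreeze it for fine-tuning."""
    for param in encoder.parameters():
        param.requires_grad = trainable
```

When fine-tuning, the encoder's forward pass has to run with gradients enabled (that is, outside `torch.no_grad()`), otherwise only the downstream model is updated.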

Hence, you can use transformer encoders to generate contextualized embeddings for input sequences in text generation.
