To resolve out-of-vocabulary (OOV) token issues in Hugging Face tokenizers, use a tokenizer that supports subword tokenization (e.g., Byte-Pair Encoding or WordPiece). Alternatively, you can add new tokens to the vocabulary.
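Here is a rough illustration of why subword tokenization avoids most OOV failures. The sketch below implements the greedy longest-match-first splitting used by WordPiece on a single word. The vocabulary `VOCAB` and the function `wordpiece_tokenize` are hypothetical toy stand-ins, not the Hugging Face implementation. Real tokenizers also pre-split text on whitespace and punctuation and include single characters in the vocabulary, so `[UNK]` is rare in practice. Byte-level BPE (as in GPT-2) can encode any string through its bytes and never needs an unknown token.

```python
# Hypothetical toy vocabulary: whole words plus "##"-prefixed continuation
# pieces, following the WordPiece convention used by BERT-style tokenizers.
VOCAB = {"[UNK]", "token", "##ization", "##ize", "un", "##believ", "##able", "the"}


def wordpiece_tokenize(word, vocab, unk="[UNK]", max_chars=100):
    """Greedy longest-match-first split of a single word into subword pieces."""
    if len(word) > max_chars:
        return [unk]
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is found in the vocabulary.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # continuation pieces carry the "##" prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            # No piece matches at this position: the whole word becomes [UNK].
            return [unk]
        pieces.append(match)
        start = end
    return pieces


print(wordpiece_tokenize("tokenization", VOCAB))  # ['token', '##ization']
print(wordpiece_tokenize("unbelievable", VOCAB))  # ['un', '##believ', '##able']
print(wordpiece_tokenize("xyz", VOCAB))           # ['[UNK]']
```

The unseen word "unbelievable" is never mapped to `[UNK]` because it can be assembled from known pieces. Only a word with no matching piece at some position falls back to the unknown token.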

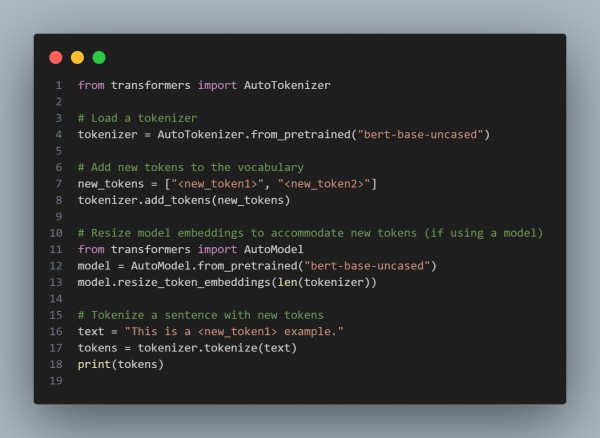

Here is the code example you refer to:

In the above code, we are using the following approaches:

- Subword Tokenization: Handles OOV words by breaking them into smaller subwords or characters.

- Adding Tokens: Extends the vocabulary with specific new tokens to handle domain-specific or custom vocabulary.

- Resizing Embeddings: Resizes the model's token-embedding matrix to match the enlarged vocabulary, so every new token ID has an embedding vector the model can use during training or inference.
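In Hugging Face Transformers these two steps correspond to `tokenizer.add_tokens([...])`, which returns the number of tokens actually added, followed by `model.resize_token_embeddings(len(tokenizer))`. The sketch below mimics that bookkeeping in plain Python so the mechanics are visible without downloading a model. The names `vocab`, `add_tokens`, `resize_embeddings`, and the example tokens are hypothetical. It assumes new rows are initialized randomly, and such vectors carry no meaning until the model is fine-tuned.

```python
import random

# Hypothetical toy setup: a vocabulary mapping token -> id, and an embedding
# table holding one row (vector) per id.
vocab = {"[UNK]": 0, "the": 1, "model": 2}
EMBED_DIM = 4
random.seed(0)
embeddings = [[random.gauss(0.0, 0.02) for _ in range(EMBED_DIM)] for _ in vocab]


def add_tokens(vocab, new_tokens):
    """Append unseen tokens with the next free id; return how many were added."""
    added = 0
    for tok in new_tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)  # ids stay contiguous: 0..len(vocab)-1
            added += 1
    return added


def resize_embeddings(embeddings, new_size, dim, std=0.02):
    """Grow the table to new_size rows; existing rows are copied unchanged."""
    resized = [row[:] for row in embeddings]
    while len(resized) < new_size:
        resized.append([random.gauss(0.0, std) for _ in range(dim)])
    return resized


num_added = add_tokens(vocab, ["covid19", "mRNA", "the"])  # "the" already exists
embeddings = resize_embeddings(embeddings, len(vocab), EMBED_DIM)
print(num_added, len(vocab), len(embeddings))  # 2 5 5
```

Resizing to `len(vocab)` rather than adding a fixed number of rows keeps the table aligned with the vocabulary even when some requested tokens were already present.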

Hence, by combining these approaches, you can resolve out-of-vocabulary token issues in Hugging Face tokenizers.
