To troubleshoot slow training with mixed precision, work through the following steps:

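- Ensure Proper Use of `torch.cuda.amp`

  - Verify that mixed precision is applied correctly: run the forward pass and loss computation inside `torch.cuda.amp.autocast()` and scale the loss with `GradScaler`. (Recent PyTorch releases deprecate these in favor of `torch.amp.autocast("cuda")` and `torch.amp.GradScaler("cuda")`.)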
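One quick way to confirm that autocast is actually active is to check output dtypes inside the context. This is a minimal sketch assuming PyTorch 1.10 or newer (for `torch.autocast`) and a toy `nn.Linear` model. On machines without a GPU it falls back to CPU autocast with `bfloat16`, which is an assumption made only so the check can run anywhere.

```python
import torch
import torch.nn as nn

# Assumption: float16 on CUDA; CPU autocast is limited to bfloat16 on many releases.
device = "cuda" if torch.cuda.is_available() else "cpu"
amp_dtype = torch.float16 if device == "cuda" else torch.bfloat16

model = nn.Linear(64, 64).to(device)  # toy model standing in for the real one
x = torch.randn(32, 64, device=device)

with torch.autocast(device_type=device, dtype=amp_dtype):
    out = model(x)

# Matmul-heavy layers such as nn.Linear should run in reduced precision under
# autocast, while the parameters themselves stay in float32.
print("output dtype:", out.dtype)           # expect amp_dtype
print("weight dtype:", model.weight.dtype)  # expect torch.float32
```

If the output is still `torch.float32`, the forward pass is probably running outside the `autocast` context.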

- Monitor GPU Utilization

  - Check whether the GPU is underutilized using `nvidia-smi`. During training, utilization should stay high (roughly 90–100%).

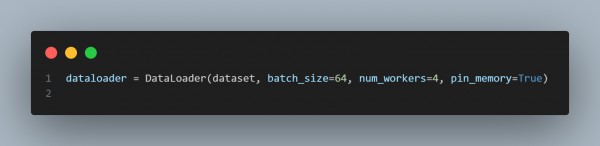
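To check utilization from a script instead of by eye, `nvidia-smi` can print CSV through its `--query-gpu` and `--format` options. This sketch parses that output with the standard library only. The 90% threshold mirrors the guideline above, and the sample text is hypothetical, not a real measurement.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]

def parse_gpu_stats(text):
    """Parse nvidia-smi CSV rows into (utilization %, used MiB, total MiB) tuples."""
    stats = []
    for line in text.strip().splitlines():
        util, used, total = (int(v.strip()) for v in line.split(","))
        stats.append((util, used, total))
    return stats

def underutilized(stats, threshold=90):
    """Return the indices of GPUs whose utilization is below the threshold."""
    return [i for i, (util, _, _) in enumerate(stats) if util < threshold]

def sample_gpus():
    """Take one live sample; returns [] when nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_stats(out)

# Hypothetical output from a two-GPU machine during training.
sample = "97, 14000, 16384\n42, 6000, 16384\n"
print(underutilized(parse_gpu_stats(sample)))  # [1]

if __name__ == "__main__":
    print("live:", sample_gpus())
```

A single sample is only a snapshot, so in practice you would sample repeatedly while training runs.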

- Reduce Data Loading Bottlenecks

  - Optimize the `DataLoader` by increasing `num_workers` and setting `pin_memory=True`.
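A `DataLoader` configured along these lines might look like the following. The worker count, batch size, and toy `TensorDataset` are placeholder assumptions to tune for your machine and data. `non_blocking=True` only lets the host-to-device copy overlap with compute when the source tensor is in pinned memory.

```python
import os
import torch
from torch.utils.data import DataLoader, TensorDataset

def make_loader(dataset, batch_size=128, num_workers=None):
    if num_workers is None:
        # Assumption: up to 8 workers is a reasonable starting point to tune from.
        num_workers = min(8, os.cpu_count() or 1)
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        num_workers=num_workers,               # load batches in parallel processes
        pin_memory=torch.cuda.is_available(),  # page-locked memory for faster copies
        persistent_workers=num_workers > 0,    # keep workers alive across epochs
    )

if __name__ == "__main__":
    device = "cuda" if torch.cuda.is_available() else "cpu"
    # Hypothetical toy dataset: 1,024 random 3x32x32 images with 10 classes.
    data = TensorDataset(torch.randn(1024, 3, 32, 32), torch.randint(0, 10, (1024,)))
    for images, labels in make_loader(data):
        # Asynchronous copies; they overlap with compute only from pinned memory.
        images = images.to(device, non_blocking=True)
        labels = labels.to(device, non_blocking=True)
        # the forward/backward pass would go here
```

The `__main__` guard matters on platforms that start workers with `spawn` (Windows, macOS), where the main module is re-imported in each worker.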

- Check Batch Size

  - Increase the batch size to make full use of the available GPU memory.
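You can automate the search for the largest batch size that fits by doubling until an out-of-memory error occurs. In this sketch, `try_step` is a hypothetical callable that runs one training step at a given batch size. The check relies on PyTorch reporting CUDA OOM as a `RuntimeError` whose message contains "out of memory" (`torch.cuda.OutOfMemoryError`, a `RuntimeError` subclass, in recent releases).

```python
def find_max_batch_size(try_step, start=8, limit=4096):
    """Return the largest start * 2**k (<= limit) that runs without OOM, or None."""
    best = None
    batch_size = start
    while batch_size <= limit:
        try:
            try_step(batch_size)
        except RuntimeError as err:
            if "out of memory" not in str(err):
                raise  # unrelated error: surface it
            # With PyTorch you would also call torch.cuda.empty_cache() here.
            break
        best = batch_size
        batch_size *= 2
    return best

# Simulated step that "runs out of memory" above 300 samples (hypothetical).
def fake_step(batch_size):
    if batch_size > 300:
        raise RuntimeError("CUDA out of memory (simulated)")

print(find_max_batch_size(fake_step))  # 256
```

Starting at 8 and doubling keeps batch sizes at multiples of 8, which NVIDIA generally recommends for FP16 Tensor Core efficiency. Leave some headroom below the value found, since memory use can vary between steps.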

- Verify Tensor Operations

  - Ensure all operations run on the GPU, and avoid unnecessary CPU-GPU data transfers (for example, calling `.item()` or `.cpu()` on every iteration).
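A common source of hidden transfers is calling `.item()` on the loss every iteration. That copies the value to the host and makes the CPU wait for the GPU. This sketch uses a toy linear computation in place of a real model. It keeps the running loss on the device and transfers it once at the end.

```python
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"
steps = 100

# Allocate directly on the target device instead of creating tensors on the
# CPU and copying them over every step.
weights = torch.randn(256, 10, device=device)
running_loss = torch.zeros((), device=device)

for step in range(steps):
    x = torch.randn(64, 256, device=device)
    loss = (x @ weights).pow(2).mean()
    # Accumulate on the device. Calling loss.item() here on every step would
    # copy the value to the host and block until the GPU catches up.
    running_loss += loss.detach()

# One device-to-host transfer at the end (or once per logging interval).
mean_loss = (running_loss / steps).item()
print(f"mean loss: {mean_loss:.4f}")
```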

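- Check Mixed-Precision Compatibility

  - Check whether operations that autocast does not cover, or deliberately keeps in float32, are causing slowdowns. Also set `torch.backends.cudnn.benchmark = True` so cuDNN can pick the fastest convolution algorithms when input sizes are fixed.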

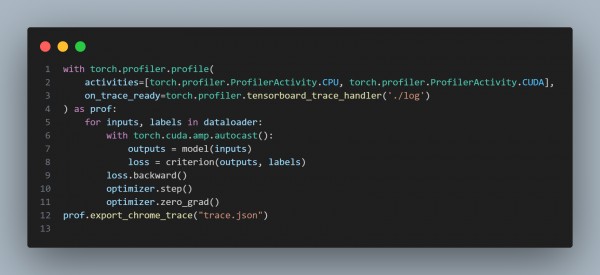
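The sketch below turns on `torch.backends.cudnn.benchmark`. It then uses forward hooks to record the output dtype of each leaf module under autocast. The small convolutional model is a placeholder. On a real model, compare any layers that still report `torch.float32` against the autocast op lists in the PyTorch AMP documentation. They either run in float32 by design or are not covered by autocast.

```python
import torch
import torch.nn as nn

# Let cuDNN benchmark convolution algorithms and cache the fastest one per
# input shape. Helpful when shapes are fixed; with frequently changing shapes
# the repeated benchmarking can cost more than it saves.
torch.backends.cudnn.benchmark = True

device = "cuda" if torch.cuda.is_available() else "cpu"
amp_dtype = torch.float16 if device == "cuda" else torch.bfloat16

# Toy model standing in for the real network (32x32 input -> 30x30 after conv).
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3), nn.ReLU(), nn.Flatten(), nn.Linear(16 * 30 * 30, 10)
).to(device)
x = torch.randn(8, 3, 32, 32, device=device)

dtypes = {}

def make_hook(name):
    def hook(module, inputs, output):
        dtypes[name] = output.dtype  # record the dtype this layer produced
    return hook

# Attach hooks to leaf modules only (modules without children).
handles = [m.register_forward_hook(make_hook(name))
           for name, m in model.named_modules() if not list(m.children())]

with torch.autocast(device_type=device, dtype=amp_dtype):
    model(x)

for h in handles:
    h.remove()

for name, dtype in dtypes.items():
    flag = "" if dtype == amp_dtype else "  <- not in reduced precision"
    print(f"{name}: {dtype}{flag}")
```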

- Profile Training Steps

  - Use PyTorch’s profiler (`torch.profiler`) to identify bottlenecks.
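A minimal `torch.profiler` session around a few training steps could look like this. The toy model, the optimizer, and the `"train_step"` label passed to `record_function` are illustrative assumptions. The table is sorted by CPU time so the sketch runs on any machine. When a GPU is present, adding the CUDA activity records kernel times as well.

```python
import torch
import torch.nn as nn
from torch.profiler import ProfilerActivity, profile, record_function

device = "cuda" if torch.cuda.is_available() else "cpu"
activities = [ProfilerActivity.CPU]
if device == "cuda":
    activities.append(ProfilerActivity.CUDA)  # also record GPU kernel times

# Toy model and optimizer standing in for the real training setup.
model = nn.Linear(512, 512).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
x = torch.randn(256, 512, device=device)

with profile(activities=activities, record_shapes=True) as prof:
    for _ in range(5):
        with record_function("train_step"):  # label that shows up in the table
            optimizer.zero_grad(set_to_none=True)
            loss = model(x).pow(2).mean()
            loss.backward()
            optimizer.step()

# Show the ten most expensive operators by total CPU time.
print(prof.key_averages().table(sort_by="cpu_time_total", row_limit=10))
```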

- Update GPU Drivers and Libraries

  - Ensure recent, mutually compatible versions of the NVIDIA driver, CUDA, cuDNN, and PyTorch are installed.
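To see which versions are actually in use, PyTorch reports its own version, the CUDA version it was built with, and the cuDNN version it loaded. The `environment_report` helper below is hypothetical. The NVIDIA driver version appears separately in the `nvidia-smi` header.

```python
import torch

def environment_report():
    """Collect the version information PyTorch exposes (hypothetical helper)."""
    report = {
        "torch": torch.__version__,
        "cuda_build": torch.version.cuda,         # None on CPU-only builds
        "cudnn": torch.backends.cudnn.version(),  # None if cuDNN is unavailable
        "gpu_available": torch.cuda.is_available(),
    }
    if report["gpu_available"]:
        report["gpu_name"] = torch.cuda.get_device_name(0)
    return report

for key, value in environment_report().items():
    print(f"{key}: {value}")
```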

Here is the code snippet you can refer to:

By systematically addressing these factors, you can find and fix the cause of slow mixed-precision training.
