You can integrate PyTorch's torch.utils.checkpoint for memory-efficient training by recomputing intermediate activations during the backward pass instead of storing them in memory.

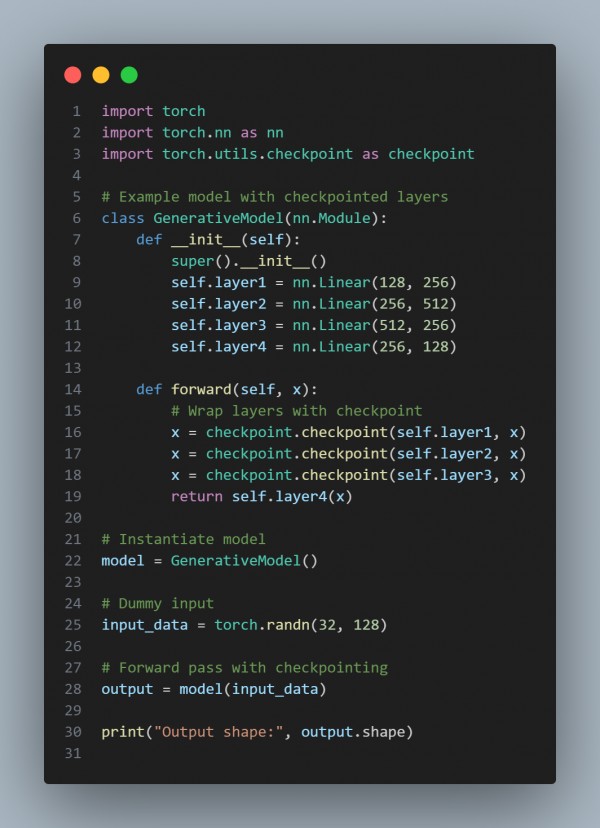

Here is the code example you can refer to:
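
A minimal, text-based sketch of the same approach is shown below. It assumes a recent PyTorch (the use_reentrant argument needs 1.11+, and 2.x recommends passing it explicitly). The Generator class, its layer sizes, train_step, and the placeholder loss are hypothetical illustrations, not part of the original answer. Each hidden block is wrapped in torch.utils.checkpoint.checkpoint during training, so only the block's input is kept and its internal activations are recomputed when backward() reaches it.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint


class Generator(nn.Module):
    """Hypothetical MLP generator; sizes are illustrative assumptions."""

    def __init__(self, latent_dim=64, hidden_dim=256, out_dim=784, num_blocks=4):
        super().__init__()
        self.latent_dim = latent_dim
        self.inp = nn.Linear(latent_dim, hidden_dim)
        # Hidden blocks whose activations are recomputed instead of stored
        self.blocks = nn.ModuleList(
            [nn.Sequential(nn.Linear(hidden_dim, hidden_dim), nn.ReLU())
             for _ in range(num_blocks)]
        )
        self.out = nn.Linear(hidden_dim, out_dim)

    def forward(self, z):
        h = torch.relu(self.inp(z))
        for block in self.blocks:
            if self.training:
                # Keep only the block's input; its internal activations are
                # recomputed during the backward pass.
                h = checkpoint(block, h, use_reentrant=False)
            else:
                # No backward pass at inference, so call the block directly
                h = block(h)
        return torch.tanh(self.out(h))


def train_step(model, optimizer, batch_size=32):
    model.train()
    z = torch.randn(batch_size, model.latent_dim)
    fake = model(z)
    # Placeholder loss for illustration; a real GAN or VAE uses its own objective
    loss = fake.pow(2).mean()
    optimizer.zero_grad()
    loss.backward()  # checkpointed blocks re-run their forward pass here
    optimizer.step()
    return loss.item()


if __name__ == "__main__":
    torch.manual_seed(0)
    gen = Generator()
    opt = torch.optim.Adam(gen.parameters(), lr=1e-3)
    for step in range(3):
        print(f"step {step}: loss = {train_step(gen, opt):.4f}")
```

Checkpointing is only applied in training mode: at inference there is no backward pass, so there are no stored activations to save.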

In the above code, we are using the following approaches:

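- torch.utils.checkpoint: Wraps memory-intensive layers so their intermediate activations are discarded after the forward pass and recomputed during backpropagation.

- Trade-off: Saves activation memory at the cost of an extra forward computation for each checkpointed segment during training.

- Usage: Well suited to deep generative models trained on GPUs with limited memory.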
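
To illustrate the trade-off concretely, the sketch below checkpoints a deep nn.Sequential stack with torch.utils.checkpoint.checkpoint_sequential and compares the gradients with an ordinary backward pass. Checkpointing only changes what is stored versus recomputed, so the gradients should match. The 16-layer depth, width of 128, and 4 segments are arbitrary illustrative choices, and the sketch assumes PyTorch 2.x.

```python
import torch
import torch.nn as nn
from torch.utils.checkpoint import checkpoint_sequential

torch.manual_seed(0)

# Hypothetical deep stack standing in for a generative model's decoder
depth, width = 16, 128
layers = []
for _ in range(depth):
    layers += [nn.Linear(width, width), nn.Tanh()]
model = nn.Sequential(*layers)

x = torch.randn(8, width)


def grads(use_checkpointing):
    model.zero_grad()
    if use_checkpointing:
        # Split the 32 modules into 4 segments: only segment inputs are kept,
        # activations inside each segment are recomputed during backward.
        out = checkpoint_sequential(model, 4, x, use_reentrant=False)
    else:
        out = model(x)
    out.sum().backward()
    return [p.grad.clone() for p in model.parameters()]


plain = grads(False)
ckpt = grads(True)
same = all(torch.allclose(a, b) for a, b in zip(plain, ckpt))
print("gradients match:", same)
```

More segments means fewer activations stored between segment boundaries but more recomputation; the right number depends on model depth and available GPU memory.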

Hence, by referring to the above, you can integrate PyTorch's torch.utils.checkpoint for memory-efficient training of generative models.
