You can monitor gradient sparsity in QLoRA training by counting the zero-valued gradient elements inside the on_after_backward hook in PyTorch Lightning.

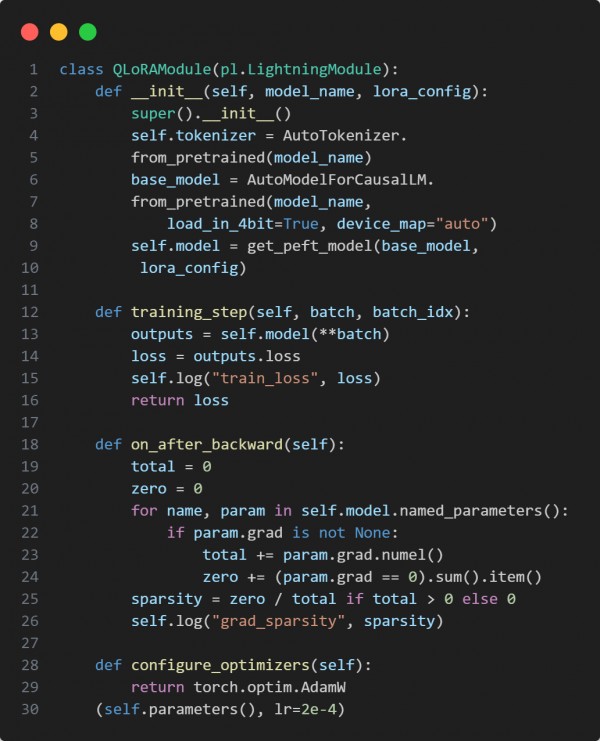

Here is the code snippet you can refer to:
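A minimal sketch of such a hook, written under a few assumptions: the names gradient_sparsity, GradSparsityMixin, and the tol parameter are hypothetical and chosen only for illustration, and the mixin is meant to be combined with your own LightningModule subclass. Parameters without a gradient are skipped, since in QLoRA the quantized base weights are frozen and only the LoRA adapters receive gradients.

```python
import torch
from torch import nn


def gradient_sparsity(parameters, tol: float = 0.0) -> float:
    """Return the fraction of gradient elements with magnitude <= tol.

    `tol` is a hypothetical knob: 0.0 counts exact zeros only.
    """
    zero_count = 0
    total_count = 0
    for p in parameters:
        # Frozen weights (e.g. the quantized base model) have no gradient.
        if p.grad is None:
            continue
        zero_count += int((p.grad.abs() <= tol).sum().item())
        total_count += p.grad.numel()
    # Avoid division by zero when nothing has a gradient yet.
    return zero_count / total_count if total_count else 0.0


class GradSparsityMixin:
    """Hypothetical mixin: list it before LightningModule in the base classes."""

    def on_after_backward(self) -> None:
        # Lightning calls this hook after loss.backward(), before the optimizer step.
        sparsity = gradient_sparsity(self.parameters())
        # self.log is provided by LightningModule; log once per training step.
        self.log("grad_sparsity", sparsity, on_step=True, on_epoch=False, prog_bar=True)
```

To use it, you would declare your model as something like class QLoRAModule(GradSparsityMixin, LightningModule) and define training_step and configure_optimizers as usual. Exact zeros can be rare in floating-point gradients, so a small positive tol may give a more informative number; that is a design choice, not something the hook requires.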

In the above code we are using the following key strategies:

- Uses on_after_backward to access gradients after the backward pass.
- Computes sparsity as the ratio of zero-valued gradient elements to all gradient elements.
- Logs grad_sparsity at every training step for analysis.

Hence, gradient sparsity tracking in QLoRA provides insights into parameter efficiency and optimization dynamics during training.
