To save and resume GAN training in PyTorch, you can use model checkpoints to store the generator, discriminator, and optimizer states.

Here are the steps you can follow:

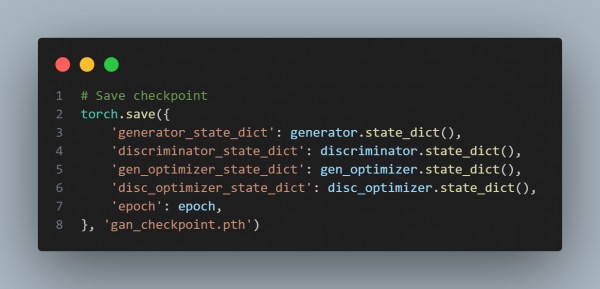

- Saving Checkpoints
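Since the saving code above is only available as a screenshot, here is a minimal text sketch of the same idea. It bundles both models, both optimizers, and the epoch into one dictionary and writes it with `torch.save`. The tiny `nn.Sequential` networks, the dictionary key names, and the file name `gan_checkpoint.pt` are hypothetical placeholders, not taken from the original code:

```python
import torch
import torch.nn as nn

# Hypothetical tiny networks, used only to make the example runnable
latent_dim = 16
generator = nn.Sequential(nn.Linear(latent_dim, 32), nn.ReLU(), nn.Linear(32, 8))
discriminator = nn.Sequential(nn.Linear(8, 32), nn.LeakyReLU(0.2), nn.Linear(32, 1))

# Typical GAN optimizer settings (an assumption; use whatever your training uses)
opt_g = torch.optim.Adam(generator.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(discriminator.parameters(), lr=2e-4, betas=(0.5, 0.999))

def save_checkpoint(path, epoch):
    """Store everything needed to resume: both models, both optimizers, and the epoch."""
    torch.save(
        {
            "epoch": epoch,
            "generator_state_dict": generator.state_dict(),
            "discriminator_state_dict": discriminator.state_dict(),
            "opt_g_state_dict": opt_g.state_dict(),
            "opt_d_state_dict": opt_d.state_dict(),
        },
        path,
    )

# Usually called at the end of an epoch, e.g. every N epochs
save_checkpoint("gan_checkpoint.pt", epoch=5)
```

Saving the optimizer states alongside the weights matters for GANs: Adam keeps running moment estimates, and restarting them from zero can noticeably disturb the balance between generator and discriminator.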

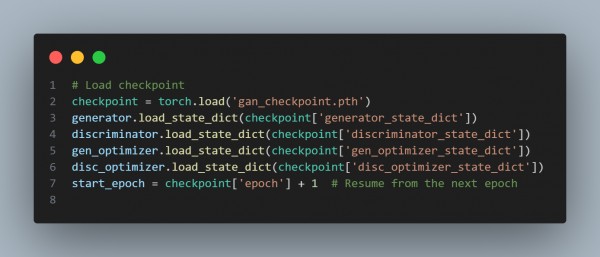

- Loading Checkpoints
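A matching sketch for loading: rebuild the models and optimizers with the same architecture and settings, restore each one with `load_state_dict()`, and return the epoch to continue from. The `build_models` and `load_checkpoint` helpers, the key names, and the "resume at saved epoch + 1" convention (which assumes the checkpoint was written after that epoch finished) are illustrative assumptions:

```python
import os
import torch
import torch.nn as nn

latent_dim = 16

def build_models():
    # Hypothetical architectures; they must match the ones used when saving
    g = nn.Sequential(nn.Linear(latent_dim, 32), nn.ReLU(), nn.Linear(32, 8))
    d = nn.Sequential(nn.Linear(8, 32), nn.LeakyReLU(0.2), nn.Linear(32, 1))
    opt_g = torch.optim.Adam(g.parameters(), lr=2e-4, betas=(0.5, 0.999))
    opt_d = torch.optim.Adam(d.parameters(), lr=2e-4, betas=(0.5, 0.999))
    return g, d, opt_g, opt_d

def load_checkpoint(path, generator, discriminator, opt_g, opt_d):
    """Restore all states in place and return the epoch to resume from."""
    if not os.path.exists(path):
        return 0  # no checkpoint yet: start training from scratch
    ckpt = torch.load(path, map_location="cpu")
    generator.load_state_dict(ckpt["generator_state_dict"])
    discriminator.load_state_dict(ckpt["discriminator_state_dict"])
    opt_g.load_state_dict(ckpt["opt_g_state_dict"])
    opt_d.load_state_dict(ckpt["opt_d_state_dict"])
    # Assumes the saved epoch had finished, so continue with the next one
    return ckpt["epoch"] + 1

generator, discriminator, opt_g, opt_d = build_models()
start_epoch = load_checkpoint("gan_checkpoint.pt", generator, discriminator, opt_g, opt_d)

num_epochs = 10
for epoch in range(start_epoch, num_epochs):
    # ... one epoch of discriminator/generator updates goes here ...
    pass
```

Falling back to epoch 0 when no file exists lets the same script handle both a fresh run and a resumed one.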

In short, the steps above do the following:

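- Save States: Use `state_dict()` to capture the parameters of both models and the internal state of both optimizers, along with the current epoch.

- Resume Training: Load the saved states back into the models and optimizers with `load_state_dict()`, then resume the training loop from the saved epoch.

- Ensure Device Compatibility: When loading on a different device (for example, a GPU checkpoint on a CPU-only machine), pass `map_location` to `torch.load()` and move the models to the target device with `.to(device)`.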
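For the device point, a minimal sketch, assuming the same hypothetical architectures and key names as the sketches above. `map_location` remaps tensors that were saved on another device. The models are moved with `.to(device)` before their optimizers are created, so the optimizers reference on-device parameters. `Optimizer.load_state_dict()` then casts the restored optimizer state to the parameters' device:

```python
import torch
import torch.nn as nn

latent_dim = 16

def build_on_device(device):
    # Hypothetical architectures; they must match the saved checkpoint
    g = nn.Sequential(nn.Linear(latent_dim, 32), nn.ReLU(), nn.Linear(32, 8)).to(device)
    d = nn.Sequential(nn.Linear(8, 32), nn.LeakyReLU(0.2), nn.Linear(32, 1)).to(device)
    # Create optimizers only after the models are on the target device
    opt_g = torch.optim.Adam(g.parameters(), lr=2e-4, betas=(0.5, 0.999))
    opt_d = torch.optim.Adam(d.parameters(), lr=2e-4, betas=(0.5, 0.999))
    return g, d, opt_g, opt_d

def load_on_device(path, device):
    """Load a checkpoint saved on any device onto `device`."""
    # map_location remaps stored tensors (e.g. saved on cuda:0) onto `device`
    ckpt = torch.load(path, map_location=device)
    g, d, opt_g, opt_d = build_on_device(device)
    g.load_state_dict(ckpt["generator_state_dict"])
    d.load_state_dict(ckpt["discriminator_state_dict"])
    # Optimizer state tensors are cast to the device of the parameters they belong to
    opt_g.load_state_dict(ckpt["opt_g_state_dict"])
    opt_d.load_state_dict(ckpt["opt_d_state_dict"])
    return g, d, opt_g, opt_d, ckpt["epoch"] + 1

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
```

With this setup, `load_on_device("gan_checkpoint.pt", device)` returns ready-to-train models, their optimizers, and the next epoch number, whatever device the checkpoint was written on.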

By following these steps, you can use model checkpoints in PyTorch to save and resume GAN training.
