A global online store like Amazon uses AI-powered chatbots to help customers. People often want to know what’s going on with their orders. Without prompt engineering, the bot could misread the request and return useless information.

A Bad Example of a Prompt:

Customer: “Where is my order?”

AI: “Please provide your order number.”

A better prompt (thanks to prompt engineering):

Customer: “What’s the status of my order #12345?”

AI: “Your order #12345 is currently out for delivery and will arrive tomorrow.”

As a result, prompt engineering makes it possible for AI to correctly understand and respond, making customers happier by giving them quick, useful answers. This can reduce follow-up customer service queries and make operations run more smoothly.

These days, it’s common to hear the expression “prompt engineering,” which sends us into a mental rabbit hole. What is prompt engineering? How does it work? And what are its advantages? Its importance shows up across domains. For instance, in customer support, a well-structured prompt can improve response accuracy. In data analysis, tailored prompts can extract specific insights. Prompt engineering helps users harness AI’s potential more effectively across various domains.

Think of an AI model as a robot assistant. How you tell it what to do is like giving it a “prompt.” Now, let’s break this down with a simple example.

Example: Asking for a Weather Update

Result: The robot might reply, “Where?” It needs more details.

Result: Now the robot knows where you’re interested, but it might ask, “Today or tomorrow?”

Result: Now the robot knows exactly what you want. No more questions, no confusion.

Prompt engineering plays a pivotal role in optimizing interactions with AI models. Its core principles revolve around crafting well-structured, context-rich instructions. Well-engineered prompts can noticeably improve model performance: by providing clear and tailored input, users can improve the accuracy and relevance of AI-generated outputs, whether in customer support, data analysis, or creative content generation. This helps AI systems fulfill their intended purpose more efficiently, which makes prompt engineering a critical practice for getting value from AI technologies.

Imagine if you asked for tomorrow’s weather and the robot told you about today. That wouldn’t be very helpful, right? So, prompt engineering helps us refine our questions, making sure the robot understands and gives us the right answers. In everyday terms, it’s like learning to ask questions in a way that leaves no room for misunderstanding. We’re basically making our communication with machines smoother and more effective.

Remember, the better we get at prompt engineering, the more accurate and helpful our robot assistants become!

To help you better understand what prompt engineering is and how you would design a prompt using a text and image model, here are some examples.

Best practices and advice for generating prompts:

Advice: Clearly articulate what you want from the AI. Ambiguous prompts can lead to misunderstood or irrelevant responses.

Example: Instead of saying “weather,” specify “What is the weather forecast for Delhi today?”

Advice: Add relevant details to your prompt to give context. This helps the AI understand your request more accurately.

Example: Instead of “restaurants,” say “recommend vegetarian-friendly restaurants in Bangalore.”

Advice: Frame prompts in a conversational manner. Avoid overly technical language to enhance understanding.

Example: Instead of “search query,” phrase it like “Can you find information on sustainable energy practices?”

Advice: If the initial response is not what you expected, refine your prompt. Experiment with different wording until you get the desired outcome.

Example: Instead of “show images,” try “display pictures of modern architecture.”

Advice: When using system prompts, carefully review and edit them. Adjusting the tone or adding specifics can significantly improve results.

Example: Instead of the generic “Translate the following English text,” provide the actual text you want translated.

Remember, the effectiveness of prompt engineering relies on the clarity and precision of your instructions. By following these tips, you can enhance your interactions with AI systems, ensuring more accurate and valuable responses.

The main goal of prompt engineering is to make inputs (prompts) that elicit the best outcomes from a language model. It may look like simple trial and error, but the method is built on a deep understanding of how these models read and write text.

To engineer effective prompts, it’s essential to understand how these language models work at a technical level.

Engineers who work with prompts need to know how transformer models function. These models don’t “think” like people do; they guess what the next token will be based on what the previous ones were. This means that where you put the information, how you say it, and even small adjustments in tone can all make a big difference in the outcome.

For example, compare these two prompts:

The second request is clearer and gives structural instructions, which leads to more predictable and useful results.

With a grasp on model behavior, the next step is to explore the actual techniques that shape how prompts are framed.

Zero-shot, few-shot, and chain-of-thought are all ways to prompt a model.

Different techniques can guide how the model responds:

Zero-shot prompting gives the model a task without any examples.

Example: “Translate this sentence to Spanish: ‘Good morning.’”

Few-shot prompting includes examples to teach the model how to behave.

Example:

Q: What is the capital of France?
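The zero-shot and few-shot styles described above can be sketched in a few lines of Python. This is only an illustration: the helper names `zero_shot` and `few_shot`, the `Q:`/`A:` layout, and the extra example pairs are assumptions made for this sketch, not part of any particular library.

```python
def zero_shot(task: str) -> str:
    """Zero-shot: send the task on its own, with no worked examples."""
    return task.strip()


def few_shot(examples: list[tuple[str, str]], question: str) -> str:
    """Few-shot: show solved Q/A pairs first, then leave the last answer open."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Illustrative example pairs (hypothetical, chosen for this sketch).
examples = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
]

zero_shot_prompt = zero_shot("Translate this sentence to Spanish: 'Good morning.'")
few_shot_prompt = few_shot(examples, "What is the capital of Italy?")
print(few_shot_prompt)
```

Ending the few-shot prompt on an open `A:` invites the model to continue the pattern set by the earlier pairs.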

These methods help customize outputs, especially in complicated situations like reasoning or conversations that go on for more than one turn.

Beyond technique, prompt engineers must also be mindful of technical constraints that affect prompt design.

Prompt engineering is not something you do once. Professionals evaluate different versions of prompts, check to see if the result is consistent, and then make changes based on what they learn. OpenAI Playground, LangChain, and PromptLayer are some of the best tools for testing and trying out prompts on a large scale.
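A minimal sketch of this test-and-compare loop, written without any of the tools named above. The functions `consistency` and `rank_variants` are hypothetical helpers, and `make_fake_model` is a stand-in for a real model call, so the sketch runs offline; in practice you would pass in whatever function sends a prompt to your model.

```python
from collections import Counter
from typing import Callable


def consistency(prompt: str, model: Callable[[str], str], runs: int = 5) -> float:
    """Fraction of runs that returned the most common output (1.0 = fully consistent)."""
    outputs = [model(prompt) for _ in range(runs)]
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / runs


def rank_variants(variants: list[str], model: Callable[[str], str], runs: int = 5):
    """Score every prompt variant and return (prompt, score) pairs, best first."""
    scored = [(p, consistency(p, model, runs)) for p in variants]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def make_fake_model() -> Callable[[str], str]:
    """Stand-in for a real LLM call: vague prompts get replies that vary between calls."""
    calls = {"n": 0}

    def fake_model(prompt: str) -> str:
        calls["n"] += 1
        if "Delhi today" in prompt:
            return "<forecast for Delhi today>"  # placeholder reply, not real data
        return ["Where?", "Which day?"][calls["n"] % 2]

    return fake_model


variants = ["weather", "What is the weather forecast for Delhi today?"]
ranking = rank_variants(variants, make_fake_model(), runs=4)
for prompt, score in ranking:
    print(f"{score:.2f}  {prompt}")
```

Exact-match consistency is a deliberately crude metric. For free-form text you would likely swap in a similarity score or a task-specific check.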

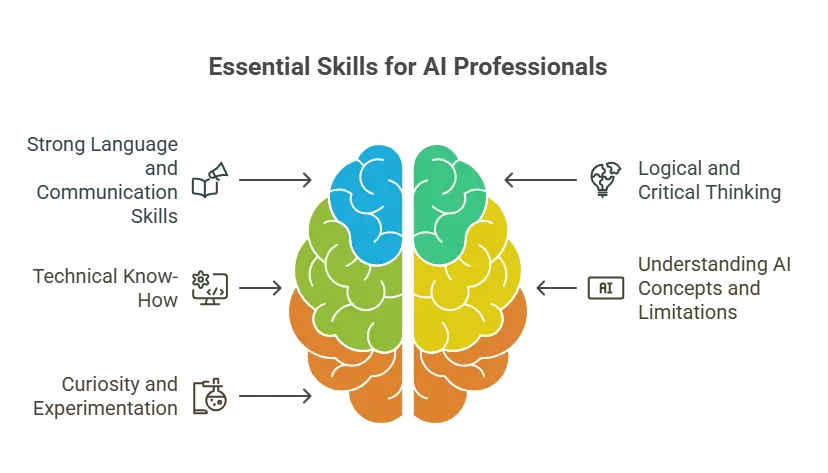

All of this technical work relies on a diverse set of human skills — let’s look at what makes an effective prompt engineer.

Prompt engineering combines creativity, logic, and technology. As a result, the skill set required is both wide and dynamic.

Since prompts are written in everyday language, it’s important to be able to write clearly and simply. Good prompt engineers think like UX writers, making sure that the instructions are clear, accurate, and follow the model’s expectations.

For example, rather than saying:

“Write about AI in healthcare.”

This prompt is too broad, which can result in surface-level information. A more specific version would be:

“Write a 600-word article on how generative AI is being used to automate radiology report generation in hospitals. Include real-world examples from U.S. or European healthcare providers published in the last two years.”

It narrows down the topic to a specific application, sets a length, and requests current real-world data, improving relevance and depth.
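One way to make that kind of specificity repeatable is to treat the prompt as structured data and render it to text. The sketch below assumes a hypothetical `PromptSpec` class invented for this illustration; the field names and the sentence wording are choices made here, not an established format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of an article-writing prompt."""
    topic: str
    word_count: Optional[int] = None
    constraints: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the topic, optional length, and extra constraints into one prompt."""
        parts = [f"Write an article on {self.topic}."]
        if self.word_count:
            parts.append(f"Keep it to about {self.word_count} words.")
        # Normalize each constraint so it ends with exactly one period.
        parts.extend(c.rstrip(".") + "." for c in self.constraints)
        return " ".join(parts)


broad = PromptSpec(topic="AI in healthcare").render()

specific = PromptSpec(
    topic="how generative AI is being used to automate radiology report generation in hospitals",
    word_count=600,
    constraints=[
        "Include real-world examples from U.S. or European healthcare providers "
        "published in the last two years"
    ],
).render()

print(broad)
print(specific)
```

Keeping topic, length, and constraints as separate fields makes it easy to vary one of them at a time when comparing prompt versions.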

Prompt engineers often create processes that need the model to follow complicated instructions or reasoning paths. Logical sequencing helps the model “think,” especially when it comes to chain-of-thought prompts or activities that require more than one step.

For example, if you want the model to answer a logic puzzle, a decent prompt may say:

“Start by identifying known facts, then eliminate impossible options, and finally deduce the correct answer.”
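The same sequencing idea can be sketched in code by attaching an explicit, numbered list of reasoning steps to a task. The helper `stepwise_prompt` and the exact phrasing of the instruction line are assumptions made for this illustration, and the puzzle text is left as a placeholder.

```python
def stepwise_prompt(task: str, steps: list[str]) -> str:
    """Append an explicit, numbered reasoning order to a task description."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{task}\n\nWork through these steps in order:\n{numbered}"


# The three steps mirror the logic-puzzle prompt quoted above.
steps = [
    "Identify the known facts",
    "Eliminate impossible options",
    "Deduce the correct answer",
]

cot_prompt = stepwise_prompt("Solve this logic puzzle: [insert puzzle text]", steps)
print(cot_prompt)
```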

But it’s not just about crafting words — prompt engineers also need technical skills to scale and automate their work.

Equally important is an understanding of the AI systems themselves and the limitations they come with.

Finally, the most successful prompt engineers are those who embrace a mindset of experimentation.

Prompt engineering is still a field that is growing quickly. Engineers need to try things out, test their ideas, and be ready to make changes quickly when models or systems change. Curiosity isn’t just helpful; it’s necessary.

With the right skillset in place, prompt engineers can create real-world impact across a range of industries.

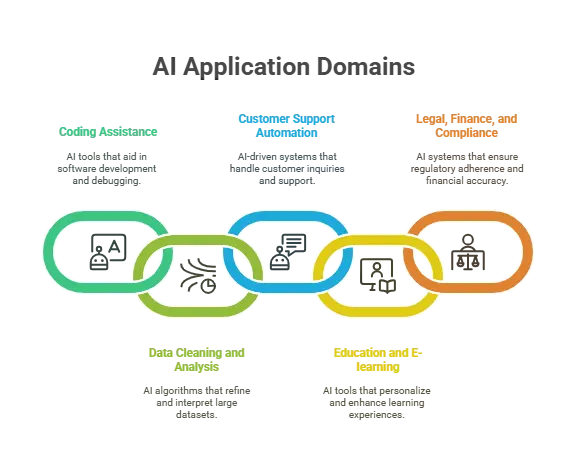

Writers, marketers, and producers utilize prompts to create everything from blog outlines to ad copy to video screenplays. A well-designed prompt may provide SEO-optimized material in seconds.

Example:

“Write a social media caption for a fitness app launch targeting millennials. Use an enthusiastic and energetic tone.”

But prompts aren’t limited to writing; they’re also changing how developers code.

Prompts are commonly used by software engineers to produce functions, debug code, and even explain programming principles. Tools like GitHub Copilot and OpenAI Codex rely largely on prompt engineering to provide context-aware coding assistance.

Example:

“Write a Python function that removes duplicate entries from a list of dictionaries based on a key value.”
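For reference, here is one plausible answer to that prompt, written by hand rather than taken from any tool's output. The function name `dedupe_by_key` and the choice to keep the first record for each key value are assumptions; the prompt itself does not say which duplicate should survive, and that is exactly the kind of detail a sharper prompt would pin down.

```python
def dedupe_by_key(records, key):
    """Return records with duplicates removed, keeping the first record per key value.

    Assumes the values stored under `key` are hashable. Records that lack
    the key are treated as sharing the value None.
    """
    seen = set()
    unique = []
    for record in records:
        value = record.get(key)
        if value not in seen:
            seen.add(value)
            unique.append(record)
    return unique


# Made-up sample data for illustration.
users = [
    {"id": 1, "name": "Asha"},
    {"id": 2, "name": "Ben"},
    {"id": 1, "name": "Asha (duplicate)"},
]

unique_users = dedupe_by_key(users, "id")
print(unique_users)
```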

In data-intensive fields, prompts can be just as powerful for analysis and transformation.

Prompts are useful in data-heavy workflows for summarizing datasets, extracting insights, and even converting formats. When used with spreadsheets or databases, LLMs can function as intelligent data assistants.

Example:

“Summarize key sales trends from this data: [insert CSV snippet]. Highlight any regional spikes.”
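Filling the `[insert CSV snippet]` slot by hand does not scale, so the step is often scripted. The sketch below uses only Python's standard `csv` and `io` modules. The helper name `sales_prompt`, the `max_rows` cap, and the sample rows are all assumptions made for illustration.

```python
import csv
import io


def sales_prompt(csv_text: str, max_rows: int = 20) -> str:
    """Embed the header and the first max_rows data rows of a CSV in the prompt."""
    rows = list(csv.reader(io.StringIO(csv_text.strip())))
    if not rows:
        raise ValueError("csv_text contains no rows")
    header, body = rows[0], rows[1:max_rows + 1]

    # Re-serialize with csv.writer so quoting stays valid after truncation.
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows([header, *body])
    snippet = out.getvalue().strip()

    return (
        "Summarize key sales trends from this data:\n"
        f"{snippet}\n\n"
        "Highlight any regional spikes."
    )


# Made-up sample data for illustration.
sample = "region,month,units\nNorth,Jan,120\nSouth,Jan,95\nNorth,Feb,310\n"
prompt = sales_prompt(sample, max_rows=2)
print(prompt)
```

Capping the number of rows is one simple way to keep the prompt within a model's input limit; sampling or pre-aggregating the data are alternatives.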

AI-powered support agents also depend on carefully constructed prompts.

Chatbots driven by prompt-tuned LLMs resolve issues, give product information, and answer questions in a human-like conversation flow.

Example:

“When a customer asks for a refund, generate a response that explains the return policy and offers empathy.”

In the classroom, prompt engineering is helping educators personalize and scale learning materials.

Educators use prompt engineering to create tests, flashcards, study guides, and even replicate Socratic conversations.

Example:

“Generate five multiple-choice questions on Newton’s laws of motion, with one correct answer and three distractors.”

Even industries like finance and law are starting to use prompts to navigate complex documents.

Professionals in regulated industries utilize LLMs to summarize documents, identify legal clauses, and clarify technical jargon, with the appropriate prompts directing safe and accurate output.

Example:

“Summarize the key terms of this loan agreement in plain English, highlighting the repayment structure.”

These examples make it clear that behind every high-quality LLM output, there’s a skilled professional crafting the prompt.

Let’s close with some real-world roles that highlight the growing importance of this profession.

In Game Development:

Prompting AI to write character dialogue, quests, or game lore in a specific style.

In this age of AI and machine learning, prompt engineering will continue to evolve. Prompts that integrate text, code, and graphics into one are likely to become available soon. Additionally, academics and engineers are creating context-specific adaptive prompts. And as AI ethics develops, prompts designed to ensure transparency and fairness will probably emerge.

For those considering a career in prompt engineering, several exciting opportunities may unfold:

As AI continues to permeate various industries, the demand for professionals skilled in prompt engineering and related fields is likely to grow. This offers diverse career paths for individuals passionate about shaping the future of human-AI interactions.

That concludes our blog on what prompt engineering is. I hope it has helped you understand prompt engineering and how to write an effective prompt. If you’re interested in going deeper, the Prompt Engineering Course will help you build on what you have learned here.

Q1. What is prompt engineering?

Prompt engineering involves creating effective inputs (prompts) to help AI models produce accurate and useful outputs.

Q2. Why is prompt engineering crucial?

Clear, organized instructions improve AI performance in customer support, data analysis, content creation, and more.

Q3. Give a short prompt engineering example.

Q4. What are prompt-writing best practices?

Q5. What prompt engineering methods are used?
