Talend simplifies the integration of big data and enables you to respond to business demands without writing complicated Hadoop code. In fact, Talend is a graphical abstraction layer on top of Hadoop applications, which makes life easier for professionals in the Big Data world.

This webinar video will walk you through the following:

The typical assertion is that Hadoop eliminates the need for ETL. What no one seems to question is the naive assumptions behind such statements. Is it realistic for most companies to move all of their data into Hadoop? Is writing ETL scripts in MapReduce code still ETL? The question isn’t really whether we are eliminating ETL, but where ETL takes place and how we are changing its definition.

E: represents the ability to consistently and reliably extract data with high performance and minimal impact to the source system.

T: represents the ability to transform one or more data sets in batch or real-time into a consumable format.

L: stands for loading data into a persistent or virtual data store.
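The three stages above can be sketched in a few lines of Python. This is only an illustrative sketch, not how Talend or Hadoop implements ETL: the sample CSV data, the `sales` table, and the function names `extract`, `transform`, `load`, and `run_etl` are all assumptions made for this example, and an in-memory SQLite database stands in for the persistent data store.

```python
import csv
import io
import sqlite3

# Hypothetical source data standing in for a real source system.
SOURCE_CSV = """customer_id,city,amount
1,pune,120.50
2,mumbai,80.00
3,pune,not_a_number
"""


def extract(text):
    """E: read raw rows from the source without modifying it."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """T: convert rows into a consumable format, skipping malformed records."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount is not numeric
        clean.append((int(row["customer_id"]), row["city"].strip().title(), amount))
    return clean


def load(records, conn):
    """L: write the transformed records into a data store (SQLite here)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer_id INTEGER, city TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", records)
    conn.commit()


def run_etl(conn):
    """Run extract, transform, and load in order; return the loaded row count."""
    load(transform(extract(SOURCE_CSV)), conn)
    return conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]


if __name__ == "__main__":
    print(run_etl(sqlite3.connect(":memory:")))
```

Keeping each stage as a separate function mirrors the E, T, and L split: the source is only read in `extract`, all cleaning happens in `transform`, and `load` is the only step that touches the target store.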

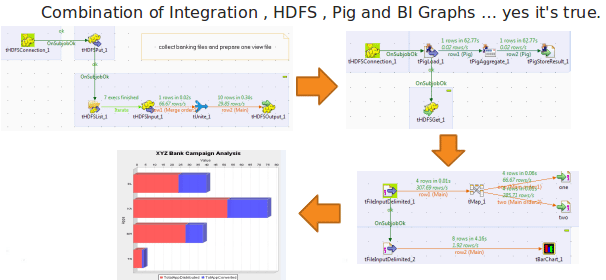

Let’s find out from the graphic below how the combination of ETL and Hadoop is helping solve major business issues:

Talend, an open-source ecosystem, is a one-stop Big Data solution. It helps:

As the world becomes more connected, a business must adapt quickly. Talend is the only graphical user interface tool capable of “translating” an ETL job into a MapReduce job. A Talend ETL job is therefore executed as a MapReduce job on Hadoop and can complete big data work within minutes.

This is a key innovation: it lowers the entry barrier to Big Data technology and allows ETL job developers, beginners as well as advanced, to carry out Data Warehouse offloading to a greater extent. With its Eclipse-based graphical workspace, Talend Open Studio for Big Data enables a developer or a data scientist to leverage Hadoop loading and processing technologies like HDFS, HBase, Hive, and Pig without having to write Hadoop application code.

With Talend, Hadoop applications can be integrated seamlessly within minutes.

For Hadoop applications to be truly accessible to your organization, they need to be smoothly integrated into your overall data flow:

Myth: I don’t know Java programming, so how would this course help me learn and excel in Big Data? The biggest advantage of Talend for Big Data is that there is no prerequisite for learning it. Whether or not you come with prior knowledge of Hadoop, this course has a lot to offer.

There are no prerequisites for learning Talend for Big Data. A professional from any background can use it. The graphic below explains the same:

Let’s find out what Talend can do in minutes:

Banking industry use-case:

Addressing the challenges of growing the business with Big Data. We will use customer-filled web-log data (collected by the bank) and, with the help of a Pig ETL job, answer the question “Where should the bank hold marketing campaigns for a new product launch to get more business?” in ETL-Big Data Analytics style.

A leading bank has initiated a new product launch campaign across cities. After the campaign, the bank wants to analyze the collected data to increase business and attract more customers. How quickly can the huge log files be analyzed, and can business value be extracted from them within seconds?
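To make the question concrete, the kind of aggregation such a job performs can be sketched in plain Python as a map step followed by a reduce step. This is a sketch under stated assumptions, not the code that Talend or Pig generates: the log format (timestamp, customer id, city, page), the sample lines, and the names `map_phase`, `reduce_phase`, and `rank_cities` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical web-log lines: timestamp, customer id, city, page visited.
LOG_LINES = [
    "2015-01-10 10:01:22,c001,Mumbai,/new-product",
    "2015-01-10 10:02:05,c002,Pune,/home",
    "2015-01-10 10:03:41,c003,Mumbai,/new-product",
    "2015-01-10 10:04:13,c004,Delhi,/new-product",
    "malformed line",
]


def map_phase(lines, target_page="/new-product"):
    """Emit a (city, 1) pair for every well-formed hit on the product page."""
    for line in lines:
        parts = line.split(",")
        if len(parts) != 4:
            continue  # skip malformed records instead of failing the job
        _, _, city, page = parts
        if page == target_page:
            yield city, 1


def reduce_phase(pairs):
    """Sum the counts emitted for each city."""
    totals = defaultdict(int)
    for city, count in pairs:
        totals[city] += count
    return dict(totals)


def rank_cities(lines):
    """Return cities ordered by interest in the new product, highest first."""
    totals = reduce_phase(map_phase(lines))
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))


if __name__ == "__main__":
    for city, hits in rank_cities(LOG_LINES):
        print(city, hits)
```

On a real cluster the map and reduce steps would run in parallel across many nodes; the point here is only that the business question reduces to a filter, a group-by on city, and a count.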

Talend can also generate graphical interpretations of the business data, which puts it in competition with dedicated business analytics tools.

Our use-case setup uses:

Is Talend similar to ETL in MSBI?

In terms of ETL it is similar, but Talend goes further in flexibility and capability: there is always a third or fourth way of doing the same thing.

Does a company need Hadoop ecosystem established before investing in Talend?

Absolutely. Hadoop is an established platform, but not everyone is able to scale it up. That’s the biggest challenge at the moment. You need a framework and technology that can get you working with Hadoop, HDFS, and MapReduce programming as soon as possible.

What does MDM ESB stand for?

MDM stands for Master Data Management and ESB stands for Enterprise Service Bus. For example, connecting to the internet and reading protocol files are both part of the ESB. Talend does act as an ESB layer.

Do we need to learn Java and Hadoop before venturing into Talend?

There is no prerequisite. Learning Java is not a necessity. It is also not necessary to learn Hadoop. If you know a few basic commands, it is good enough. Everything is done through Talend.

Here’s the PPT presentation:

Got a question for us? Mention it in the comments section and we will get back to you, or join our Talend certification course today.
