Python Programming Certification Course

- 66k Enrolled Learners

- Weekend

- Live Class

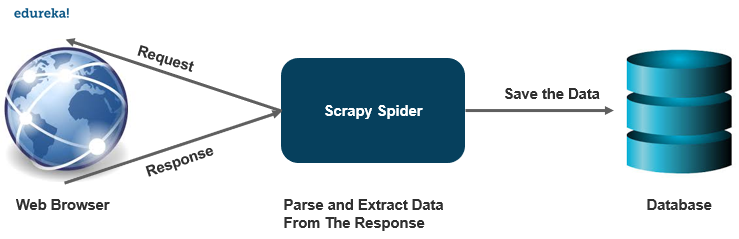

Web scraping is an effective way of gathering data from the webpages, it has become an effective tool in data science. With various python libraries present for web scraping like beautifulsoup, a data scientist’s work becomes optimal. Scrapy is a powerful web framework used for extracting, processing and storing data. We will learn how we can make a web crawler in this scrapy tutorial, following are the topics discussed in this blog:

Scrapy is a free and open-source web crawling framework written in python. It was originally designed to perform web scraping, but can also be used for extracting data using APIs. It is maintained by Scrapinghub ltd.

Scrapy is a complete package when it comes to downloading the webpages, processing and storing the data on the databases.

It is like a powerhouse when it comes to web scraping with multiple ways to scrape a website. Scrapy handles bigger tasks with ease, scraping multiple pages or a group of URLs in less than a minute. It uses a twister that works asynchronously to achieve concurrency.

It provides spider contracts that allow us to create generic as well as deep crawlers. Scrapy also provides item pipelines to create functions in a spider that can perform various operations like replacing values in data etc.

A web-crawler is a program that searches for documents on the web automatically. They are primarily programmed for repetitive action for automated browsing.

How it works?

A web-crawler is quite similar to a librarian. It looks for the information on the web, categorizes the information and then indexes and catalogs the information for the crawled information to be retrieved and stored accordingly.

The operations that will be performed by the crawler are created beforehand, then the crawler performs all those operations automatically which will create an index. These indexes can be accessed by an output software.

Let’s take a look at various applications a web-crawler can be used for:

Price comparison portals search for specific product details to make a comparison of prices on different platforms using a web-crawler.

A web-crawler plays a very important role in the field of data mining for the retrieval of information.

Data analysis tools use web-crawlers to calculate the data for page views, inbound and outbound links as well.

Crawlers also serve to information hubs to collect data such as news portals.

To install scrapy on your system, it is recommended to install it on a dedicated virtualenv. Installation works pretty similarly to any other package in python, if you are using conda environment, use the following command to install scrapy:

conda install -c conda-forge scrapy

you can also use the pip environment to install scrapy,

pip install scrapy

There might be a few compilation dependencies depending on your operating system. Scrapy is written in pure python and may depend on a few python packages like:

lxml – It is an efficient XML and HTML parser.

parcel – An HTML/XML extraction library written on top on lxml

W3lib – It is a multi-purpose helper for dealing with URLs and webpage encodings

twisted – An asynchronous networking framework

cryptography – It helps in various network-level security needs

To start your first scrapy project, go to the directory or location where you want to save your files and execute the following command

scrapy startproject projectname

After you execute this command, you will get the following directories created on that location.

projectname/

scrapy.cfg: it deploys configuration file

projectname/

__init__.py: projects’s python module

items.py: project items definition file

middlewares.py: project middlewares file

pipelines.py: project pipelines file

settings.py: project settings file

spiders/

__init__.py: a directory where later you will put your spiders

Spiders are classes that we define and scrapy uses to gather information from the web. You must subclass scrapy.Spider and define the initial requests to make.

You write the code for your spider in a separate python file and save it in the projectname/spiders directory in your project.

quotes_spider.py

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

def start_request(self):

urls = [ 'http://quotes.toscrape.com/page/1/',

http://quotes.toscrape.com/page/2/,

]

for url in urls:

yield scrapy.Request(url=url , callback= self.parse)

def parse(self, response):

page = response.url.split("/")[-2]

filename = 'quotes-%s.html' % page

with open(filename, 'wb') as f:

f.write(response.body)

self.log('saved file %s' % filename)

As you can see, we have defined various functions in our spiders,

name: It identifies the spider, it has to be unique throughout the project.

start_requests(): Must return an iterable of requests which the spider will begin to crawl with.

parse(): It is a method that will be called to handle the response downloaded with each request.

Until now the spider does not extract any data, it just saved the whole HTML file. A scrapy spider typically generates many dictionaries containing the data extracted from the page. We use the yield keyword in python in the callback to extract the data.

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [ http://quotes.toscrape.com/page/1/',

http://quotes.toscrape.com/page/2/,

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css(span.text::text').get(),

'author': quote.css(small.author::text')get(),

'tags': quote.css(div.tags a.tag::text').getall()

}

When you run this spider, it will output the extracted data with the log.

The simplest way to store the extracted data is by using feed exports, use the following command to store your data.

scrapy crawl quotes -o quotes.json

This command will generate a quotes.json file containing all the scraped items, serialized in JSON.

This brings us to the end of this article where we have learned how we can make a web-crawler using scrapy in python to scrape a website and extract the data into a JSON file. I hope you are clear with all that has been shared with you in this tutorial.

If you found this article on “Scrapy Tutorial” relevant, check out the Edureka Python Certification Training, a trusted online learning company with a network of more than 250,000 satisfied learners spread across the globe.

We are here to help you with every step on your journey and come up with a curriculum that is designed for students and professionals who want to be a Python developer. The course is designed to give you a head start into Python programming and train you for both core and advanced Python concepts along with various Python frameworks like Django.

If you come across any questions, feel free to ask all your questions in the comments section of “Scrapy Tutorial” and our team will be glad to answer.

Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUP

Thank you for registering Join Edureka Meetup community for 100+ Free Webinars each month JOIN MEETUP GROUPedureka.co