Dataframe is a buzzword in the industry nowadays. People use Dataframes with popular data analysis languages such as Python, Scala and R. With the evident need to handle complex analysis and munging tasks for Big Data, Python for Spark, or PySpark, has become one of the most sought-after skills in the industry today. So, why is everyone using it so much? Let’s understand this with our PySpark Dataframe Tutorial blog.

A Dataframe generally refers to a data structure that is tabular in nature. It is made up of rows, each of which holds one observation, and columns, each of which holds one variable. A row can contain values of different data types (heterogeneous), whereas a column contains values of a single data type (homogeneous). Data frames usually carry some metadata in addition to the data, for example column and row names.

We can say that a Dataframe is nothing but a 2-dimensional data structure, similar to an SQL table or a spreadsheet. Now let’s move ahead with this PySpark Dataframe Tutorial and understand why exactly we need PySpark Dataframes.

1. Processing Structured and Semi-Structured Data

Dataframes are designed to process large collections of structured as well as semi-structured data. Observations in a Spark DataFrame are organized under named columns, which helps Apache Spark understand the schema of the DataFrame. Knowing the schema lets Spark optimize the execution plan for queries on it. DataFrames can also handle petabytes of data.

2. Slicing and Dicing

Data frame APIs usually support elaborate methods for slicing and dicing the data. These include operations such as selecting rows, columns, and cells by name or by number, filtering out rows, and so on. Statistical data is usually very messy and contains lots of missing values, wrong values and range violations. So a critically important feature of data frames is the explicit management of missing data.

3. Data Sources

DataFrames support a wide range of data formats and sources, which we’ll look into later in this PySpark Dataframe Tutorial blog.

4. Support for Multiple Languages

DataFrames have API support for different languages such as Python, R, Scala and Java, which makes them easier to use for people with different programming backgrounds.

Dataframes in Pyspark can be created in multiple ways:

Data can be loaded from a CSV, JSON, XML or Parquet file. A Dataframe can also be created from an existing RDD or from another data store such as Hive or Cassandra. The data can come from HDFS or from the local file system.

Let’s move forward with this PySpark Dataframe Tutorial blog and understand how to create Dataframes.

We’ll create Employee and Department instances.

from pyspark.sql import Row

Employee = Row("firstName", "lastName", "email", "salary")

employee1 = Employee('Basher', 'armbrust', 'bash@edureka.co', 100000)

employee2 = Employee('Daniel', 'meng', 'daniel@stanford.edu', 120000)

employee3 = Employee('Muriel', None, 'muriel@waterloo.edu', 140000)

employee4 = Employee('Rachel', 'wendell', 'rach_3@edureka.co', 160000)

employee5 = Employee('Zach', 'galifianakis', 'zach_g@edureka.co', 160000)

print(Employee[0])

print(employee3)

department1 = Row(id='123456', name='HR')

department2 = Row(id='789012', name='OPS')

department3 = Row(id='345678', name='FN')

department4 = Row(id='901234', name='DEV')

Next, we’ll create DepartmentWithEmployees instances from the Employee and Department rows.

departmentWithEmployees1 = Row(department=department1, employees=[employee1, employee2, employee5])

departmentWithEmployees2 = Row(department=department2, employees=[employee3, employee4])

departmentWithEmployees3 = Row(department=department3, employees=[employee1, employee4, employee3])

departmentWithEmployees4 = Row(department=department4, employees=[employee2, employee3])

Let’s create our Dataframe from the list of Rows.

departmentsWithEmployees_Seq = [departmentWithEmployees1, departmentWithEmployees2]

dframe = spark.createDataFrame(departmentsWithEmployees_Seq)

display(dframe)  # notebook helper (e.g. Databricks); skip it in a plain PySpark shell

dframe.show()

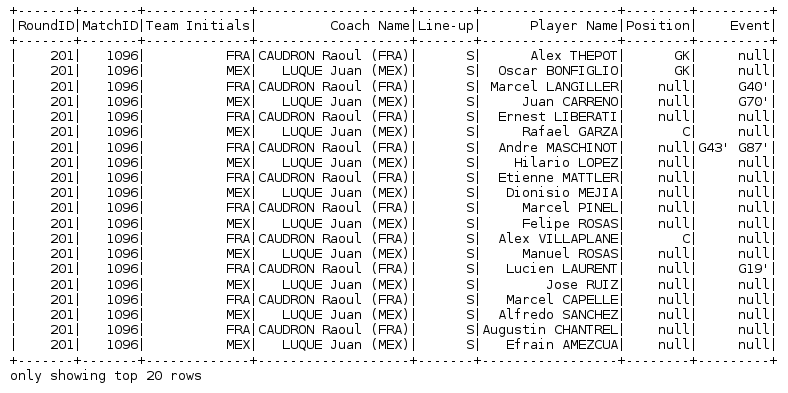

Here we have taken the FIFA World Cup Players dataset. We are going to load this data, which is in CSV format, into a dataframe and then learn about the different transformations and actions that can be performed on it.

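Let’s load the data from a CSV file. Here we are going to use the spark.read.csv method to load the data into a dataframe, fifa_df. This method is a shortcut for the more general spark.read.format("csv").load(...) form, and other formats such as JSON can be read the same way with spark.read.format("json").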

fifa_df = spark.read.csv("path-of-file/fifa_players.csv", inferSchema = True, header = True)

fifa_df.show()

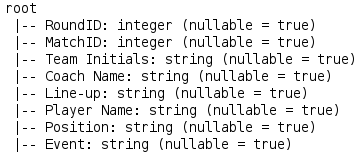

To have a look at the schema, i.e. the structure of the dataframe, we’ll use the printSchema method. This gives us the columns in our dataframe along with the data type and the nullable flag of each column.

fifa_df.printSchema()

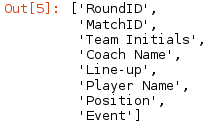

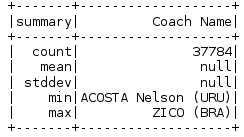

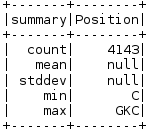

When we want to look at the column names, the number of rows and the number of columns of a particular Dataframe, we use the following.

fifa_df.columns  # column names

fifa_df.count()  # row count

len(fifa_df.columns)  # column count

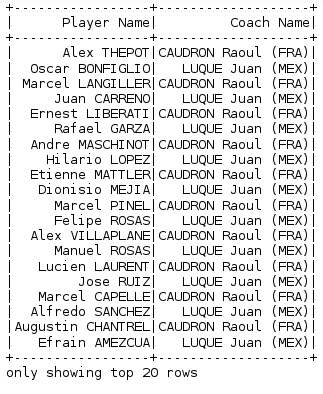

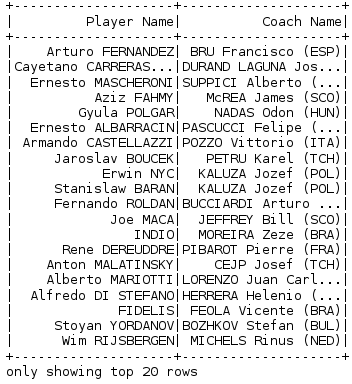

If we want to select particular columns from the dataframe, we use the select method.

fifa_df.select('Player Name','Coach Name').show()

fifa_df.select('Player Name','Coach Name').distinct().show()

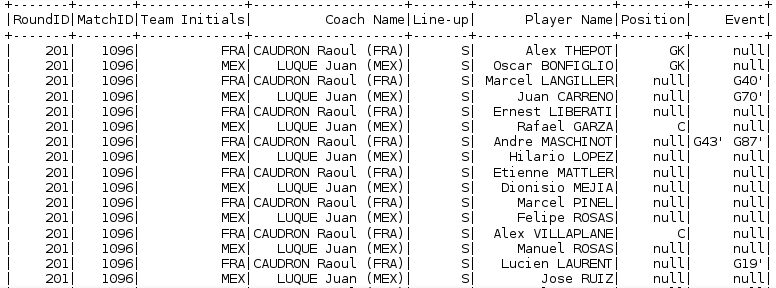

To filter the data according to a specified condition, we use the filter method. Here we keep only the rows whose MatchID equals 1096 and then count how many rows are in the filtered output.

fifa_df.filter(fifa_df.MatchID=='1096').show()

fifa_df.filter(fifa_df.MatchID=='1096').count()  # to get the count

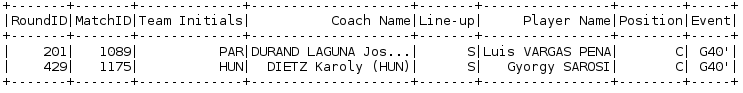

We can filter our data based on multiple conditions, combining them with & (AND) or | (OR). Each condition needs its own parentheses.

fifa_df.filter((fifa_df.Position=='C') & (fifa_df.Event=="G40'")).show()

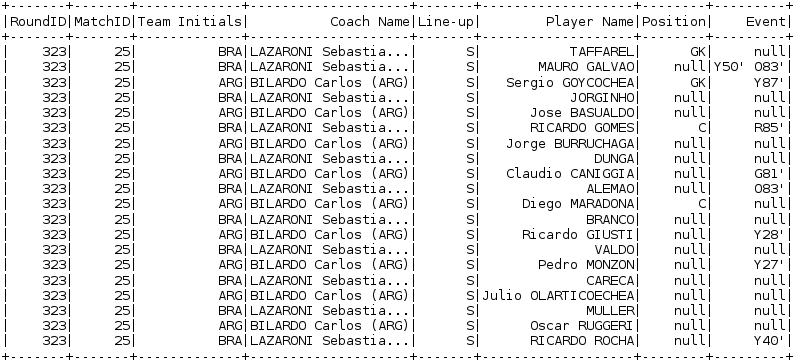

To sort the data we use the orderBy method. By default it sorts in ascending order, but we can change it to descending order as well.

fifa_df.orderBy(fifa_df.MatchID).show()

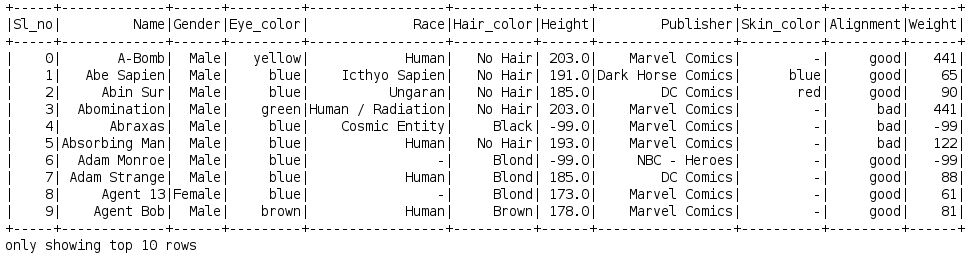

Next, we’ll work with a superheroes dataset. We load the data from a CSV file in the same way as we did earlier.

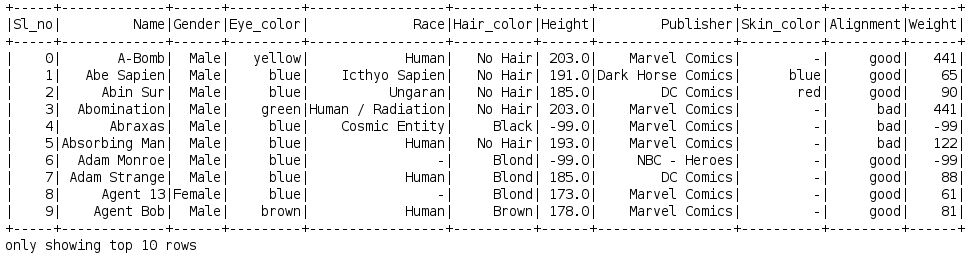

Superhero_df = spark.read.csv("path-of file/superheros.csv", inferSchema = True, header = True)

Superhero_df.show(10)

Superhero_df.filter(Superhero_df.Gender == 'Male').count()  # male heroes count

Superhero_df.filter(Superhero_df.Gender == 'Female').count()  # female heroes count

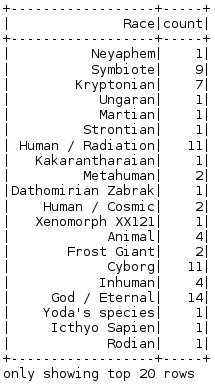

groupBy is used to group the dataframe by the specified column. Here we group the dataframe by the Race column and then use the count function to find the number of heroes of each race.

Race_df = Superhero_df.groupby("Race").count()

Race_df.show()

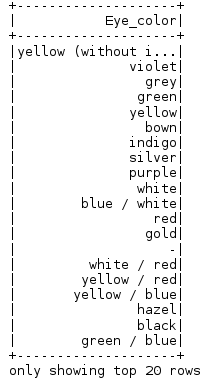

We can also run SQL queries directly against any dataframe. For that, we need to register the dataframe as a temporary table using the registerTempTable method (deprecated since Spark 2.0 in favor of createOrReplaceTempView) and then pass the SQL queries to sqlContext.sql().

Superhero_df.registerTempTable('superhero_table')

sqlContext.sql('select * from superhero_table').show()

sqlContext.sql('select distinct(Eye_color) from superhero_table').show()

sqlContext.sql('select distinct(Eye_color) from superhero_table').count()

23

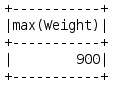

sqlContext.sql('select max(Weight) from superhero_table').show()

And with this, we come to the end of this PySpark Dataframe Tutorial.

So this is it, guys!

I hope you got an idea of what a PySpark Dataframe is, why it is used in the industry and what its features are. Congratulations, you are no longer a newbie to Dataframes. If you want to learn more about PySpark and understand the different industry use cases, have a look at our Spark with Python and PySpark Tutorial Blog.
