Apache Spark is a powerful, flexible, and widely adopted standard for in-memory data computation, capable of batch processing, real-time processing, and analytics on the Hadoop platform. Integrated into Cloudera's platform, it is among the highest-paying and most in-demand technologies in the current IT market.

Apache Spark is the multi-role jet fighter in the battle against the colossal loads of Big Data in data analytics. It can handle almost any type of data, regardless of its structure and size, at lightning speed. Here are a few reasons why Spark is considered the most powerful Big Data tool.

Spark can be integrated directly with Hadoop’s HDFS and works as an excellent data processing tool. Coupled with YARN, it can run on the same cluster alongside MapReduce jobs.

Learning Spark has become a global standard, as Big Data analytics is growing remarkably with Apache Spark standing by its side.

There is a large performance gap between MapReduce and Spark. The lightning-fast performance that comes from its in-memory processing capability earned Spark its place among the top-level Apache projects.

Spark's simple programming interface supports popular programming languages such as Scala, Java, and Python. This gave Spark a considerable edge in production environments and led to a massive surge in its demand.

Because of its superior performance and reliability, Spark is preferred by many top organizations such as Adobe, Yahoo, NASA, and many more. Correspondingly, the demand for Spark Developers is also rising rapidly.

To know more about the Spark importance in the current IT market, please go through this article

Apache Spark is an open-source framework from the Apache Software Foundation. It was designed as an upgrade to Apache Hadoop’s processing capabilities. Contrary to a common myth, Apache Spark is not a replacement for Hadoop. It is another processing layer, like MapReduce.

Now, the definition. Apache Spark is a lightning-fast cluster computing framework that provides an interface for programming an entire cluster with implicit data parallelism and fault tolerance.
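
To make "implicit data parallelism" a little more concrete, here is a minimal sketch in plain Python. It is not Spark and does not use Spark's API: the helper names (`split_into_partitions`, `count_words`, `parallel_word_count`) and the sample lines are hypothetical, and a thread pool stands in for the cluster. The point it tries to show is that the caller writes only the per-partition logic, while splitting the data, running the pieces side by side, and merging the results happen behind the scenes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_partitions(records, num_partitions):
    """Deal records round-robin into num_partitions lists (hypothetical helper)."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_words(partition):
    """Per-partition work: the only logic the caller has to write."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(lines, num_partitions=3):
    """Run count_words on every partition concurrently, then merge the results."""
    partitions = split_into_partitions(lines, num_partitions)
    # The thread pool is a stand-in for cluster workers (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    # Merge the per-partition counters into a single result.
    return reduce(lambda left, right: left + right, partial_counts, Counter())


# Hypothetical sample input.
lines = [
    "spark runs on yarn",
    "spark reads from hdfs",
    "yarn schedules spark jobs",
]
totals = parallel_word_count(lines)
print(totals.most_common(2))
```

Because each partition is processed independently from its own slice of the input, a partition whose worker failed could simply be recomputed from that slice. That is, roughly, the intuition behind the fault tolerance mentioned in the definition, though a real cluster framework handles it in a far more involved way than this sketch suggests.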

There is a thin line between becoming a certified Apache Spark Developer and being an actual Apache Spark Developer who is capable of performing in real-world applications.

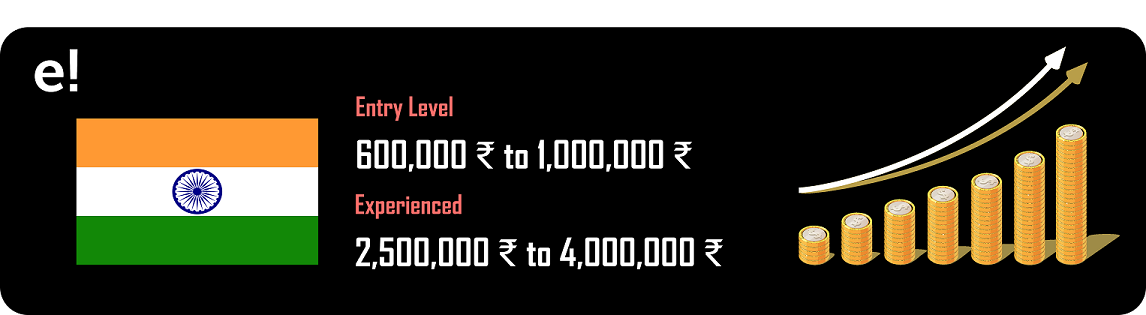

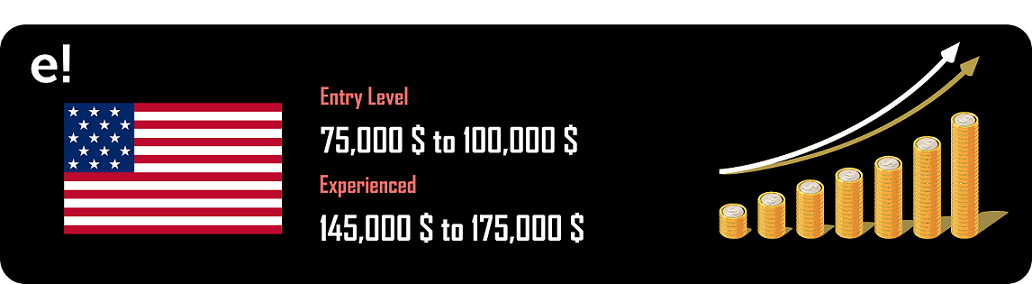

Apache Spark Developers are among the most sought-after professionals, with handsome salary packages compared to others. We will now discuss the salary trends of Apache Spark Developers in different nations.

First, India.

In India, the average salary offered to an entry-level Spark Developer is between ₹600,000 and ₹1,000,000 per annum. For an experienced Spark Developer, on the other hand, salaries range between ₹2,500,000 and ₹4,000,000 per annum.

Next, in the United States of America, the salary offered to a beginner-level Spark Developer is $75,000 to $100,000 per annum. Similarly, for an experienced Spark Developer, salaries range between $145,000 and $175,000 per annum.

Now, let us understand the skills, roles and responsibilities of an Apache Spark Developer.

Apache Spark is one of the most widely adopted technologies. It has changed the face of many IT businesses and helped them reach their current accomplishments and beyond. Let us now discuss some of the tech giants and major players in the IT industry that use Spark.

So, with this, we come to the end of this “How to become a Spark Developer?” article. I hope we have shed some light on Spark, Scala, and Hadoop, along with the features and importance of the CCA-175 certification.

This article is based on the Apache Spark Certification training, which is designed to prepare you for the Cloudera Hadoop and Spark Developer Certification Exam (CCA175). You will get in-depth knowledge of Apache Spark and the Spark ecosystem, which includes Spark RDD, Spark SQL, Spark MLlib, and Spark Streaming. You will also gain comprehensive knowledge of the Scala programming language, HDFS, Sqoop, Flume, Spark GraphX, and messaging systems such as Kafka.
