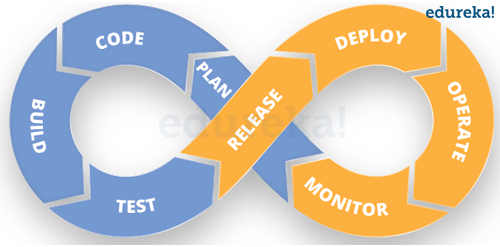

Many of you might be aware of the theory behind DevOps. But do you know how to implement DevOps principles in real life? In this blog, I will discuss DevOps real-time scenarios that will give you a brief understanding of how things work in practice.

So let us begin with our first topic: the various problems solved by DevOps.

The most important value realized through DevOps is that it allows IT organizations to focus on their “core” business activities. By removing constraints within the value stream and automating deployment pipelines, teams can focus on the activities that create customer value rather than just moving bits and bytes. These activities increase the sustainable competitive advantage of a company and create better business outcomes.

Internally, DevOps is the way to achieve the agility to deliver secure code with insights. It is important to have gates and a well-crafted DevOps process. When you deliver a new version, it can run side-by-side with the current version. You can then compare application and performance metrics between the two to confirm that the new version accomplishes what you intended.
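To make the side-by-side comparison concrete, here is a minimal sketch in Python. It assumes a single "lower is better" metric (response time in milliseconds) and a tolerance of 10%. The function name `canary_passes`, the sample numbers, and the threshold are all illustrative assumptions, not part of any particular tool.

```python
from statistics import mean


def canary_passes(baseline, candidate, max_regression=0.10):
    """Return True if the candidate's mean is at most `max_regression`
    (as a fraction) worse than the baseline's mean. Lower is better."""
    allowed = mean(baseline) * (1 + max_regression)
    return mean(candidate) <= allowed


# Hypothetical response times (ms) sampled from both versions.
current_version_ms = [120, 118, 125, 122]
new_version_ms = [124, 121, 130, 126]

if canary_passes(current_version_ms, new_version_ms):
    print("New version is within tolerance; continue the rollout.")
else:
    print("New version regressed; stop the rollout.")
```

In practice you would compare several metrics (error rate, latency percentiles, resource usage) and use a proper statistical test, but the gate itself can stay this simple.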

DevOps drives development teams towards continuous improvement and faster release cycles. If done well, this iterative process allows more focus, over time, on the things that really matter, such as creating great experiences for users, and less time spent managing tools, processes, and tech.

The most important problem being solved is the reduction of the complexity of the process. This contributes significantly towards our business success by shortening our time to market, giving us quick feedback on features, and making us more responsive to our customers’ needs.

The greatest value of a successful DevOps implementation is higher confidence in delivery, along with visibility and traceability into what’s going on, so you can solve problems quicker.

Another important advantage of DevOps is not wasting any time. Aligning an organization’s people and resources enables rapid deployments and updates. This allows DevOps programs to fix problems before they turn into disasters. DevOps creates a culture of transparency that promotes focus and collaboration among development, operations, and security teams.


Members of a development team have different roles, responsibilities, and priorities. The product manager’s first priority might be launching new features, while project managers have to make sure that their team meets the deadline. Programmers might think that stopping to fix every minor bug as it occurs will slow them down. They might feel that keeping the build clean is an extra burden and that they won’t benefit from the extra effort. This can potentially jeopardize the adoption process.

To overcome this:

Firstly, make sure your whole team is on board before you adopt continuous integration.

CTOs and team leaders must help the team members understand the costs and benefits of continuous integration.

Highlight how and when developers will benefit from committing to a different working method that requires a bit more openness and flexibility.

Adopting CI inevitably means changing some essential parts of your development workflow. Your developers may be reluctant to change a workflow that isn’t broken, especially if the team has a long-established routine around its current workflow.

If you wish to change the workflow, you must do it with great care. Otherwise, it could compromise the productivity of the development team and also the quality of the product. Without sufficient support from the leadership, the development team might be reluctant to undertake a task with such risks involved.

To overcome this:

Give your team enough time to develop their new workflow and to select a flexible continuous integration solution that can support it.

Also, assure them that the company has their backs even if things do not go smoothly at the beginning.

The immediate effect of adopting continuous integration is that your team will test more often. So more tests will need more test cases and writing test cases can be time-consuming. Hence, developers often need to divide their time between fixing bugs and writing test cases.

In the short term, developers might save time by testing manually, but it will hurt more in the long run. The longer they put off writing test cases, the more difficult it becomes to catch up with the pace of development. In the worst-case scenario, your team might end up going back to their old testing process.

To overcome this:

You must emphasize that writing test cases from the beginning could save a lot of time for your team and can ensure high test coverage of your product.

Also, embed the idea in your company culture that test cases are assets as valuable as the codebase itself.

It is a common problem that when bigger teams work together, the number of CI notifications becomes overwhelming and developers start ignoring and muting them. As a result, they might miss the updates that are relevant to them.

It can lead to a stage where developers become desensitized to broken builds and error messages. The longer they ignore relevant notifications, the longer they develop in the wrong direction without feedback. This could potentially cause huge rollbacks and wasted money, resources, and time.

To overcome this:

Send each notification only to the developers who are responsible for fixing the issue.
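As a rough illustration of targeted notifications, the sketch below picks recipients for a build based on who committed to it. The `BuildResult` class, the `recipients_for` function, and the fallback address are hypothetical names made up for this example; a real CI server would supply this data through its own API or plugin.

```python
from dataclasses import dataclass, field


@dataclass
class BuildResult:
    build_id: int
    passed: bool
    commit_authors: list = field(default_factory=list)


def recipients_for(build, fallback="team-lead@example.com"):
    """Return the people who should hear about this build.

    Passing builds notify nobody. Failing builds notify only the authors
    of the commits in that build, or a fallback contact if none are known.
    """
    if build.passed:
        return []
    authors = sorted(set(build.commit_authors))
    return authors or [fallback]


failed = BuildResult(42, False, ["bob@example.com", "alice@example.com", "bob@example.com"])
print(recipients_for(failed))
```

The design choice here is that silence is the default: nobody is notified unless a build fails and they are connected to it.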


If you get your requirements right then almost half of the battle is won. So if you have a very specific and accurate understanding of requirements, you can design test plans better and cover requirements well.

Yet, many teams spend a lot of time and effort simply clarifying the requirements. This is a very common pitfall and to avoid this, teams can adopt Model-based testing and Behavior-Driven Development techniques. This helps to design test scenarios accurately and adequately.

These practices help teams find and resolve gaps in the requirements more quickly. They also enable teams to generate more test cases automatically right from the early stages of a sprint.
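One very simple form of generating test cases from a model is to describe the inputs of a feature and enumerate their combinations. The sketch below assumes a made-up checkout feature with two inputs; the model, the function names, and the Given/When wording are illustrative only, and dedicated model-based testing or BDD tools do considerably more than this.

```python
from itertools import product

# Hypothetical model of a checkout feature: each input and its possible values.
checkout_model = {
    "user": ["guest", "member"],
    "cart": ["empty", "with items"],
}


def generate_scenarios(model):
    """Return one scenario (a dict) per combination of input values."""
    names = list(model)
    return [dict(zip(names, values)) for values in product(*(model[n] for n in names))]


def describe(scenario):
    """Render a scenario in a Given/When style sentence."""
    return f"Given a {scenario['user']} user and a cart that is {scenario['cart']}, when they check out"


for scenario in generate_scenarios(checkout_model):
    print(describe(scenario))
```

Each generated scenario still needs an expected outcome, which is exactly the requirement conversation the team should have early in the sprint.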

The advantages of continuous testing and continuous delivery are closely tied to pipeline orchestration. This directly means understanding how it works, why it works, how to analyze the results, and how and when to scale. Everything depends on the pipeline and hence you need to integrate the pipeline with the automation suite.

But the reason teams fumble is that no single solution provides the complete toolchain required to build a CD pipeline.

Teams typically have to search for the pieces of the puzzle that are right for them. There are no perfect tools, only best-of-breed tools that integrate with multiple other tools and, ideally, expose an API that permits easy integration.

In short, it is impossible to implement continuous testing without the speed and reliability of a standardized and automated pipeline.

Another important scenario is that continuous testing becomes more complex as it moves towards the production environment. The tests grow in number as well as complexity with the maturing code and the environment becoming more complex.

You must update tests each time you update different phases and automated scripts. As a result, the overall time it takes to run the tests also tends to increase towards the release.

The solution for this lies in improved test orchestration that provides the right amount of test coverage in shorter sprint cycles and enables teams to deliver confidently. Ideally, the entire process must be automated with CT carried out at various stages. This is done by using policy gates and manual intervention, up until the code is pushed to production.
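A policy gate can be as small as a set of minimum values that a build must meet before it moves to the next stage. The following sketch assumes two metrics, a test pass rate and a coverage figure. The metric names, thresholds, and the `evaluate_gate` function are assumptions for illustration and do not come from a specific CI product.

```python
def evaluate_gate(metrics, policy):
    """Return the names of the policy rules that the build violates.

    `policy` maps a metric name to its required minimum. A metric that is
    missing from `metrics` counts as a violation.
    """
    violations = []
    for name, minimum in policy.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            violations.append(name)
    return violations


# Hypothetical policy and build metrics.
staging_policy = {"test_pass_rate": 1.0, "coverage": 0.80}
build_metrics = {"test_pass_rate": 1.0, "coverage": 0.75}

violations = evaluate_gate(build_metrics, staging_policy)
if violations:
    print("Gate failed:", ", ".join(violations))
else:
    print("Gate passed; promote the build.")
```

A failed gate is also a natural place for manual intervention: someone can review the violations and decide whether to fix the build or grant an exception.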

Without frequent feedback loops at every stage of the development cycle, continuous testing is not possible. This is partly the reason why CT is difficult to implement. You don’t just need automated tests, but you also need visibility of the test results and execution.

Traditional feedback loops like logging tools, code profilers, and performance monitoring tools are not effective anymore. They neither work together nor provide the depth of insight required to fix issues. Real-time dashboards that generate reports automatically and give actionable feedback across the entire SDLC help release software into production faster and with fewer defects. Giving all team members real-time access to these dashboards supports the continuous feedback mechanism.

Continuous Testing simply means testing more often, and this requires hitting multiple environments more frequently. This presents a bottleneck if those environments are not available when they are required. Some environments are available through APIs and some through various interfaces. Some of these environments are built on modern architecture, while others run on monolithic legacy client/server or mainframe systems.

But the question here is how do you coordinate testing through the various environment owners? It is also possible that they may not always keep the environments up and running. The answer to all this is Virtualization. By virtualizing the environment, you can test the code without worrying too much about areas that are unchanging. Making the environments accessible and available on-demand through virtualization surely helps remove a significant bottleneck from your pipeline.
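The idea can be sketched at the smallest possible scale: replace a dependency you do not own with a stand-in that returns canned answers, so your own code can be tested at any time. In the example below, the inventory service, its `stock_level` method, and the `can_fulfil` function are all hypothetical; real service virtualization tools simulate whole systems over the network rather than a single Python class.

```python
class VirtualInventoryService:
    """Stand-in for an inventory system owned by another team.

    It answers from canned data, so tests do not depend on the real
    environment being up and running.
    """

    def __init__(self, canned_stock):
        self._stock = dict(canned_stock)

    def stock_level(self, sku):
        # Unknown items are treated as out of stock.
        return self._stock.get(sku, 0)


def can_fulfil(inventory, sku, quantity):
    """Code under test: it only needs something with a stock_level method."""
    return inventory.stock_level(sku) >= quantity


virtual_inventory = VirtualInventoryService({"SKU-1": 5})
print(can_fulfil(virtual_inventory, "SKU-1", 3))
```

Because `can_fulfil` depends only on the `stock_level` method, the same code runs unchanged against the real service in later stages of the pipeline.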

Distributed applications normally require more than ‘copying and pasting’ files to a server. The complexity tends to increase if you have a farm of servers. Uncertainty about what to deploy, where, and how is a pretty normal thing. The result? Long waiting times to get our artifacts into the next environment along the route, which delays everything: testing, time to go live, and so on.

What does DevOps bring to the table? Development and IT operations teams define a deployment process in a blameless collaboration session. First, they verify what works and then take it to the next level with automation to facilitate continuous delivery. This drastically cuts deployment time; it also paves the way for more frequent deployments.

We frequently encounter failures after deploying a new version of a working piece of software. The failure is often a missing library or a database script that was not updated, and the root cause is usually a lack of clarity about which dependencies to deploy and where they belong. Fostering collaboration between development and operations can help resolve these sorts of problems in the majority of cases.

When it comes to automation, you can define dependencies explicitly, which helps a lot in speeding up deployments. Configuration management tools like Puppet or Chef add an extra level of dependency definition. We can define dependencies not only within our application but also at the infrastructure and server configuration level. For example, we can create a virtual machine for a test, and install and configure Tomcat before our artifacts are published.
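To show what "defining dependencies" buys you, here is a small sketch that derives a deployment order from declared dependencies using Python's standard `graphlib` module (Python 3.9+). The step names mirror the Tomcat example above but are otherwise made up; this is not how Puppet or Chef express dependencies, only the underlying idea.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it.
deployment_steps = {
    "create_vm": set(),
    "install_tomcat": {"create_vm"},
    "configure_tomcat": {"install_tomcat"},
    "publish_artifacts": {"configure_tomcat"},
}

# static_order() yields every step after all of its prerequisites.
deployment_order = list(TopologicalSorter(deployment_steps).static_order())

for step in deployment_order:
    print(step)
```

Once the dependencies are written down, the order is computed rather than remembered, and a circular dependency raises `graphlib.CycleError` instead of surfacing as a failed deployment.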

Sometimes monitoring tools are configured in a way that produces a lot of irrelevant data from production. Other times they produce too little, or nothing at all. In both cases there is no definition of what you need to look after and what the metrics are.

You must agree on what to monitor and which information to produce, and then put controls in place. Application Performance Management tools are a great help. If your organization can afford them, take a look at AppDynamics, New Relic, and AWS X-Ray.

DevOps is all about eliminating the risks associated with new software development, and data analysis identifies those risks. To continuously measure and improve the DevOps process, analytics should span the entire pipeline. This provides invaluable insights to management at all stages of the software development lifecycle.

With all the data that is generated at any given time, organizations need to accept that they can’t analyze it all. There’s simply not enough time in the day, and unfortunately, robots aren’t sophisticated enough to do it all for us just yet.

For that reason, it’s important to determine which data sets are most significant. In most cases, this is going to be different for every organization. So before diving in, determine key business objectives and goals. Typically, these goals revolve around customer needs – primarily the most valuable features that are most important to end-users. For a retailer, for example, analyzing how traffic is interacting with the checkout page on the site and testing how it works in the back-end is at the top of the list.

Some quick tips to identify which data is most important to analyze:

Make a chart: Determine the impact outages will have on your business, asking questions such as, “If X breaks, what effect will it have on other features?”

Look at historical data: Identify where issues have arisen in the past and continue to analyze data from tests and builds to ensure they don’t happen again.
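The "If X breaks, what else is affected?" chart from the first tip can be kept as data and queried. The sketch below assumes a hypothetical retail site with five features; the feature names, the `depends_on` map, and the `impacted_by` function are invented for illustration.

```python
from collections import deque

# Hypothetical map: each feature and the features it depends on.
depends_on = {
    "checkout": ["payments", "cart"],
    "cart": ["catalog"],
    "search": ["catalog"],
    "payments": [],
    "catalog": [],
}


def impacted_by(failed, depends_on):
    """Return every feature that directly or indirectly depends on `failed`."""
    # Invert the map: for each feature, which features rely on it?
    dependents = {}
    for feature, requirements in depends_on.items():
        for requirement in requirements:
            dependents.setdefault(requirement, set()).add(feature)

    impacted = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for feature in dependents.get(current, ()):
            if feature not in impacted:
                impacted.add(feature)
                queue.append(feature)
    return sorted(impacted)


print(impacted_by("catalog", depends_on))
```

Features with the longest list of dependents are good candidates for the data you analyze first.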

Today, most organizations still operate with different teams and personas identifying their own goals and utilizing their own tools and technologies. Each team acts independently, disconnected from the pipeline and meeting with other teams only during the integration phase.

When it comes to looking at the bigger picture and identifying what is and isn’t working, the organization struggles to arrive at one solution. This is mostly because teams fail to share their data with each other, which makes an overall analysis impossible.

To overcome this issue, overhaul the flow of communication to ensure everyone is collaborating throughout the SDLC, not just during the integration process.

First, make sure there’s strong synchronization on DevOps metrics from the get-go. Each team’s progress should be displayed in one single dashboard, utilizing the same Key Performance Indicators (KPIs) to give management visibility into the entire process. This is done so that they can collect all the necessary data to analyze what went wrong (or what succeeded).

Beyond the initial metrics conversation, there should be constant communication via team meetings or digital channels like Slack.

When short-staffed, we need smarter tools that utilize deep learning to classify the data we’re collecting and reach decisions quickly. After all, nobody has time to look at every single test execution (and for some big organizations, there can be about 75,000 in a given day). The trick is to eliminate the noise and find the right things to focus on.

This is where artificial intelligence and machine learning can help. Many tools on the market today utilize AI and ML to do things like:

Develop scripts and tests to move and validate different pieces of data

Report on quality based on previously learned behaviors

Work in response to real-time changes.
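Before reaching for AI, it helps to see how much noise plain grouping already removes. The sketch below does not use machine learning at all: it simply counts failed test executions by error type so the most common cause surfaces first. The record layout and the `summarize_failures` function are assumptions made for this example.

```python
from collections import Counter


def summarize_failures(executions):
    """Count failed executions per error type, most frequent first."""
    errors = Counter(run["error"] for run in executions if run["status"] == "failed")
    return errors.most_common()


# Hypothetical test execution records.
executions = [
    {"test": "test_login", "status": "passed", "error": None},
    {"test": "test_checkout", "status": "failed", "error": "TimeoutError"},
    {"test": "test_search", "status": "failed", "error": "TimeoutError"},
    {"test": "test_profile", "status": "failed", "error": "AssertionError"},
]

for error, count in summarize_failures(executions):
    print(f"{error}: {count}")
```

With thousands of executions a day, a summary like this turns a wall of results into a short list of causes, and ML-based tools take the same idea further by learning which groups matter.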

So with this, we have come to the end of this article on DevOps Real Time Scenarios.

Now that you have understood what DevOps Real Time Scenarios are, check out this DevOps Training with Gen AI by Edureka, a trusted online learning company with a network of more than 250,000 satisfied learners spread across the globe. The Edureka PG Program in DevOps Training course helps learners understand what DevOps is and gain expertise in various DevOps processes and tools such as Puppet, Jenkins, Nagios, Ansible, Chef, SaltStack, and Git for automating multiple steps in the SDLC. You can also check out our DevOps Engineer Course, which will help you gain expertise in DevOps tools.

Got a question for us? Please mention it in the comments section of this DevOps Real Time Scenarios article and we will get back to you.
