Agentic AI is changing industries by allowing intelligent systems to plan autonomously, recall context, and work with external technologies such as APIs and databases. In customer service, for example, an agentic system can proactively troubleshoot problems by drawing on long-term customer data and coordinating multiple services without human intervention. As this technology shapes the future of AI, understanding its fundamentals is essential for anyone preparing for interviews in this rapidly growing field.

Agentic AI refers to AI systems that possess the autonomy to perceive their environment, make decisions, and take actions to achieve specific goals. Unlike traditional AI, which often operates based on predefined rules or patterns, Agentic AI systems can adapt to new situations, learn from experiences, and make decisions without explicit human intervention.

Key Differences:

Autonomy: Agentic AI systems can operate independently, making decisions based on their understanding of the environment. Traditional AI systems typically require human input for decision-making.

Adaptability: Agentic AI can learn from new data and experiences, adjusting its behavior accordingly. Traditional AI systems often lack this level of adaptability.

Goal-Oriented Behavior: Agentic AI is designed to achieve specific objectives, often involving complex sequences of actions. Traditional AI may perform tasks without a clear understanding of overarching goals.

Example:

Consider a self-driving car:

Traditional AI: Might use pattern recognition to identify stop signs and traffic lights but relies on predefined responses.

Agentic AI: Can navigate through traffic, make decisions at intersections, reroute based on traffic conditions, and learn from new driving scenarios to improve future performance.

Agentic AI has a wide range of applications across various industries:

Customer Service:

Application: AI agents handle customer inquiries, complaints, and support tickets autonomously.

Example: An AI agent that processes customer emails, identifies the issue, and provides a resolution without human intervention.

Healthcare:

Application: AI agents assist in diagnosing diseases, recommending treatments, and monitoring patient health.

Example: An AI system that analyzes patient data to predict potential health risks and suggests preventive measures.

Finance:

Application: AI agents manage investment portfolios, detect fraudulent activities, and provide financial advice.

Example: An AI agent that monitors transactions for unusual patterns indicative of fraud and takes immediate action.

Manufacturing:

Application: AI agents oversee production lines, predict equipment failures, and optimize supply chains.

Example: An AI system that adjusts manufacturing schedules in real-time based on demand fluctuations.

Education:

Application: AI agents provide personalized learning experiences, assess student performance, and suggest improvements.

Example: An AI tutor that adapts its teaching style based on a student’s learning pace and comprehension.

Several tools and platforms support the development and deployment of Agentic AI systems:

LangChain:

Description: A framework for developing applications powered by language models.

Use Case: Building chatbots, question-answering systems, and more.

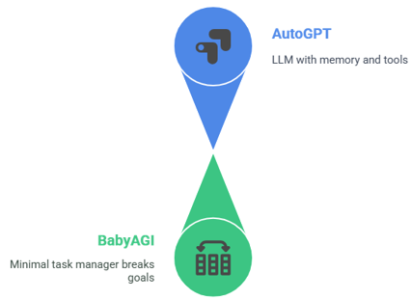

AutoGPT:

Description: An experimental open-source application showcasing the capabilities of GPT-4 to autonomously achieve goals.

Use Case: Automating complex tasks without human intervention.

BabyAGI:

Description: A Python script that uses OpenAI and vector databases to create and manage tasks.

Use Case: Demonstrating task management and execution by AI agents.

ReAct (Reasoning and Acting):

Description: A framework combining reasoning and acting for language agents.

Use Case: Enhancing decision-making capabilities of AI agents.

AgentGPT:

Description: A platform to deploy autonomous AI agents in the browser.

Use Case: Experimenting with AI agents without extensive setup.

Large Language Models (LLMs) are foundational to many Agentic AI systems. Some commonly used LLMs include:

OpenAI’s GPT-4:

Features: Advanced language understanding and generation capabilities.

Use Case: Chatbots, content creation, code generation.

Google’s PaLM:

Features: Multilingual understanding, reasoning, and code generation.

Use Case: Translation services, summarization, question answering.

Anthropic’s Claude:

Features: Focus on safety and interpretability in AI responses.

Use Case: Applications requiring ethical considerations and transparency.

Meta’s LLaMA:

Features: Open-source models optimized for research purposes.

Use Case: Academic research, experimentation in AI applications.

Cohere’s Command R+:

Features: Retrieval-augmented generation capabilities.

Use Case: Applications needing integration of external knowledge sources.

An Agentic AI system typically comprises the following components:

Perception Module:

Function: Gathers data from the environment through sensors or data inputs.

Reasoning Engine:

Function: Processes the perceived data to make informed decisions.

Planning Module:

Function: Develops a sequence of actions to achieve specific goals.

Action Module:

Function: Executes the planned actions in the environment.

Learning Module:

Function: Learns from experiences to improve future performance.

Memory:

Function: Stores past experiences, knowledge, and data for future reference.

Example:

In a warehouse automation scenario:

Perception Module: Scans inventory levels.

Reasoning Engine: Determines which items need restocking.

Planning Module: Schedules restocking tasks.

Action Module: Directs robots to move items.

Learning Module: Analyzes efficiency and adjusts future plans.

Memory: Keeps records of inventory movements and patterns.
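The warehouse example can be sketched as a single perceive, reason, plan, act loop. This is a minimal illustration rather than a production design: the `WarehouseAgent` class, the `REORDER_LEVEL` threshold, and the inventory dictionary are hypothetical names introduced here, and the learning module is left out to keep the sketch short.

```python
from dataclasses import dataclass, field

REORDER_LEVEL = 10  # hypothetical threshold below which an item is restocked


@dataclass
class WarehouseAgent:
    memory: list = field(default_factory=list)  # record of past actions

    def perceive(self, inventory):
        # Perception: read the current stock levels (copied so the input is not mutated).
        return dict(inventory)

    def reason(self, stock):
        # Reasoning: decide which items are below the reorder level.
        return [item for item, qty in stock.items() if qty < REORDER_LEVEL]

    def plan(self, low_items, stock):
        # Planning: restock the emptiest items first.
        return sorted(low_items, key=lambda item: stock[item])

    def act(self, tasks):
        # Action: a real system would dispatch robots; here the task is only recorded.
        for item in tasks:
            self.memory.append(f"restock {item}")
        return tasks

    def step(self, inventory):
        stock = self.perceive(inventory)
        low = self.reason(stock)
        return self.act(self.plan(low, stock))


agent = WarehouseAgent()
done = agent.step({"bolts": 3, "nuts": 50, "washers": 7})
print(done)          # ['bolts', 'washers']
print(agent.memory)  # ['restock bolts', 'restock washers']
```

Keeping each module as its own method makes it possible to test or swap one stage (for example, a smarter planner) without touching the others.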

Ethical considerations are paramount in the development and deployment of Agentic AI systems:

Transparency:

Ensuring AI decisions are explainable and understandable to users.

Accountability:

Defining responsibility for AI actions, especially in cases of errors or harm.

Bias and Fairness:

Preventing discriminatory outcomes by addressing biases in data and algorithms.

Privacy:

Safeguarding user data and ensuring compliance with data protection regulations.

Autonomy:

Balancing AI autonomy with human oversight to prevent unintended consequences.

Security:

Protecting AI systems from malicious attacks and ensuring robustness.

Example:

An AI hiring tool must be designed to avoid biases that could lead to unfair treatment of candidates based on gender, race, or other protected attributes.

Deploying AI agents introduces several security risks:

Data Breaches:

Unauthorized access to sensitive data processed by AI agents.

Adversarial Attacks:

Manipulating AI inputs to cause incorrect outputs or behaviors.

Model Inversion:

Extracting sensitive information from AI models.

Unauthorized Actions:

AI agents performing unintended actions due to flawed decision-making.

Dependency Exploits:

Vulnerabilities in third-party libraries or APIs used by AI agents.

Mitigation Strategies:

Implement robust authentication and authorization mechanisms.

Regularly update and patch AI systems.

Conduct security audits and penetration testing.

Employ monitoring systems to detect and respond to anomalies.

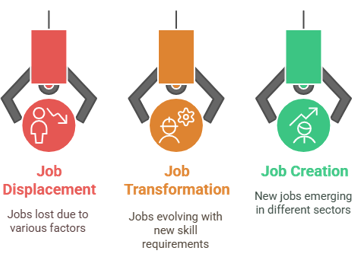

AI has the potential to automate certain tasks, leading to the transformation of job roles rather than outright replacement. While some repetitive or routine jobs may be automated, new opportunities emerge in areas such as AI oversight, maintenance, and development.

Considerations:

Job Displacement: Roles involving repetitive tasks are more susceptible to automation.

Job Transformation: Many jobs will evolve, requiring new skills and adaptability.

Job Creation: AI development and maintenance create new employment opportunities.

Example:

In manufacturing, AI-powered robots may handle assembly line tasks, but human workers are needed to oversee operations, handle complex tasks, and maintain equipment.

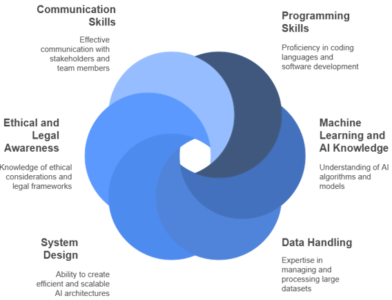

Developing Agentic AI systems requires a multidisciplinary skill set:

Programming Skills:

Proficiency in languages like Python, Java, or C++.

Machine Learning and AI Knowledge:

Understanding algorithms, model training, and evaluation.

Data Handling:

Skills in data preprocessing, analysis, and management.

System Design:

Ability to architect complex systems integrating various components.

Ethical and Legal Awareness:

Understanding the ethical implications and legal requirements of AI deployment.

Communication Skills:

Effectively conveying technical concepts to non-technical stakeholders.

Example:

An AI engineer working on an autonomous vehicle must integrate sensor data processing with real-time decision-making algorithms and ensure compliance with safety regulations.

Building an AI Agent involves several key steps:

Define Objectives:

Clearly outline the tasks the agent should perform.

Example: Automate customer support responses.

Select Appropriate Tools and Frameworks:

Choose platforms like LangChain, AutoGPT, or BabyAGI based on project requirements.

Design the Agent Architecture:

Determine components like perception modules, decision-making units, and action executors.

Integrate with Large Language Models (LLMs):

Utilize models like GPT-4 for natural language understanding and generation.

Implement Memory and Learning Mechanisms:

Incorporate memory to retain context and learning algorithms to improve over time.

Test and Iterate:

Continuously evaluate the agent’s performance and refine its behavior.

    # Note: these imports follow the legacy LangChain API. Newer releases
    # deprecate initialize_agent and relocate the OpenAI wrapper, so adjust
    # them to your installed version.
    from langchain.agents import initialize_agent, Tool
    from langchain.llms import OpenAI

    # Define a simple tool
    def greet(name):
        return f"Hello, {name}!"

    tools = [
        Tool(
            name="Greeter",
            func=greet,
            description="Greets the user by name."
        )
    ]

    # Initialize the agent
    llm = OpenAI(temperature=0)
    agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)

    # Run the agent
    agent.run("Greet John")

This code sets up a basic agent using LangChain that greets a user by name.

System Prompt:

Sets the behavior or persona of the AI.

Example: “You are a helpful assistant.”

User Prompt:

Represents the user’s input or query.

Example: “What’s the weather like today?”

In Agentic AI, the system prompt guides the agent’s responses, ensuring consistency and alignment with desired behaviors.
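As a rough illustration of how the two prompt types travel together, many chat-style LLM APIs accept a list of role-tagged messages. The helper `build_messages` below is a hypothetical name introduced here, and the exact message schema depends on the provider you use.

```python
def build_messages(system_prompt, user_prompt, history=None):
    """Return a chat-style message list: system first, then history, then the new user turn."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages(
    "You are a helpful assistant.",
    "What's the weather like today?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Because the system message is always placed first, the persona stays fixed while user turns change from call to call.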

Tool Use:

Refers to the AI agent’s ability to utilize external tools or APIs to perform tasks.

Example: Using a calculator API to compute mathematical expressions.

Function Calling:

Involves invoking specific functions within the code to achieve a result.

Example: Calling a translate() function to convert text between languages.

Here is the code snippet you can refer to:

    def translate(text, target_language):
        # Placeholder for translation logic
        return f"Translated '{text}' to {target_language}"

    # Agent uses the translate function
    result = translate("Hello", "Spanish")
    print(result)  # Output: Translated 'Hello' to Spanish
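In practice, function calling usually means the model emits a structured request (often JSON) naming a function and its arguments, and the surrounding code dispatches it. The sketch below assumes a simple `{"name": ..., "arguments": ...}` shape; the `TOOLS` registry and `dispatch` helper are hypothetical names, and real providers each define their own schema.

```python
import json


def translate(text, target_language):
    # Placeholder, as in the snippet above: no real translation happens.
    return f"Translated '{text}' to {target_language}"


def add(a, b):
    return a + b


# Registry mapping tool names to Python callables.
TOOLS = {"translate": translate, "add": add}


def dispatch(call_json):
    """Parse a JSON function call and run the matching tool."""
    call = json.loads(call_json)
    name = call["name"]
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name](**call["arguments"])


# Pretend the model emitted this structured call.
model_output = '{"name": "translate", "arguments": {"text": "Hello", "target_language": "Spanish"}}'
print(dispatch(model_output))
```

Routing every call through one registry also gives a single place to reject tools the agent is not allowed to use.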

A planning module enables an AI agent to:

Set Goals: Define what it aims to achieve.

Develop Strategies: Outline steps to reach the goals.

Adapt Plans: Modify strategies based on new information.

This module is crucial for tasks requiring multi-step reasoning and adaptability.

Example:

In a delivery robot:

Goal: Deliver a package to a specific address.

Strategy: Navigate through the optimal route.

Adaptation: Reroute if an obstacle is detected.
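The delivery-robot example can be sketched with a small grid planner. This is only an illustration under simple assumptions: the map is a 2D list where `1` marks a blocked cell, the planner is a plain breadth-first search, and `plan_route` is a hypothetical name. A real robot would use richer maps and cost-aware search.

```python
from collections import deque


def plan_route(grid, start, goal):
    """Breadth-first search on a grid; 1 marks a blocked cell. Returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


grid = [
    [0, 0, 0],
    [0, 0, 0],
    [0, 0, 0],
]
first_route = plan_route(grid, (0, 0), (0, 2))
print(first_route)  # [(0, 0), (0, 1), (0, 2)]

# Adaptation: an obstacle appears on the planned route, so the agent replans.
grid[0][1] = 1
new_route = plan_route(grid, (0, 0), (0, 2))
print(new_route)
```

The goal stays fixed while the strategy changes, which is the distinction the three bullets above draw.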

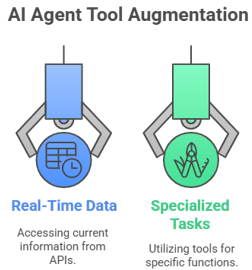

Tool augmentation involves enhancing an AI agent’s capabilities by integrating external tools, allowing it to:

Access Real-Time Data: Fetch current information from APIs.

Perform Specialized Tasks: Utilize tools for specific functions like image recognition.

Example:

An AI writing assistant augmented with grammar-checking tools can provide more accurate suggestions.

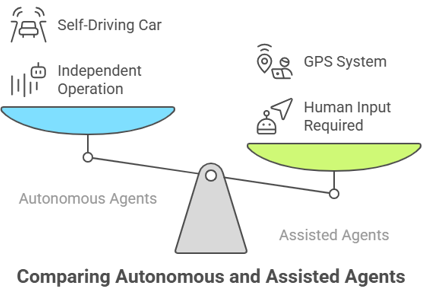

Autonomous Agents:

Operate independently without human intervention.

Example: A self-driving car navigating traffic.

Assisted Agents:

Require human input or oversight to function.

Example: A GPS system providing directions based on user input.

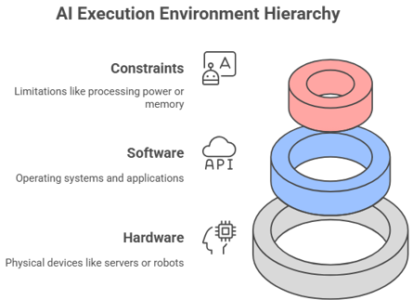

An execution environment is the platform where an AI agent operates, encompassing:

Hardware: Physical devices like servers or robots.

Software: Operating systems and applications.

Constraints: Limitations like processing power or memory.

Example:

A virtual assistant running on a smartphone operates within the device’s execution environment.

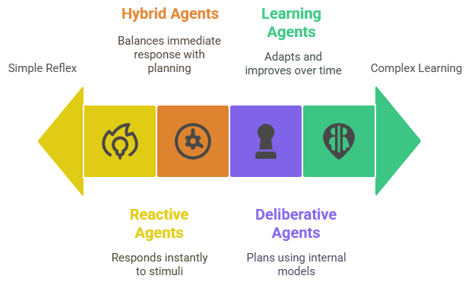

Reactive Agents:

Respond to stimuli without internal memory.

Example: Thermostats adjusting temperature.

Deliberative Agents:

Use internal models to make decisions.

Example: Chess-playing programs evaluating future moves.

Hybrid Agents:

Combine reactive and deliberative approaches.

Example: Autonomous drones navigating environments.

Learning Agents:

Improve performance over time through learning.

Example: Recommendation systems adapting to user preferences.

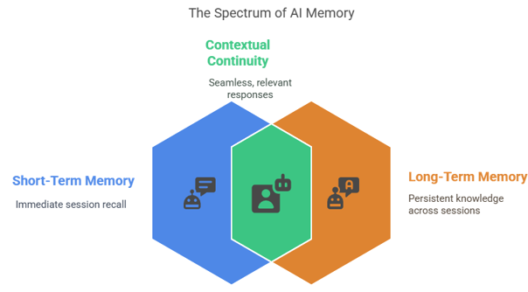

Memory in AI agents enables them to retain information across interactions, enhancing their ability to provide contextually relevant responses. There are two primary types:

Short-Term Memory: Retains recent interactions within a session.

Long-Term Memory: Stores information across sessions for future reference.

Implementation Example:

Using LangChain’s ConversationBufferMemory:

    from langchain.memory import ConversationBufferMemory

    memory = ConversationBufferMemory()
    memory.save_context({"input": "Hi"}, {"output": "Hello!"})
    print(memory.load_memory_variables({}))
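To make the short-term versus long-term distinction concrete without any framework, here is a library-free sketch. The `AgentMemory` class and its method names are hypothetical; a real agent would typically persist long-term memory in a database rather than a dictionary.

```python
from collections import deque


class AgentMemory:
    def __init__(self, short_term_size=3):
        # Short-term: only the most recent turns of the current session.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: facts keyed by user, kept across sessions.
        self.long_term = {}

    def add_turn(self, user_text, agent_text):
        self.short_term.append((user_text, agent_text))

    def remember_fact(self, user_id, key, value):
        self.long_term.setdefault(user_id, {})[key] = value

    def end_session(self):
        # Short-term memory is cleared; long-term memory survives.
        self.short_term.clear()


agent_memory = AgentMemory(short_term_size=3)
for i in range(1, 5):
    agent_memory.add_turn(f"question {i}", f"answer {i}")
agent_memory.remember_fact("user-42", "preferred_language", "Spanish")

print(len(agent_memory.short_term))  # 3: the oldest turn was dropped
agent_memory.end_session()
print(len(agent_memory.short_term))  # 0
print(agent_memory.long_term)        # {'user-42': {'preferred_language': 'Spanish'}}
```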

Reflection allows AI agents to evaluate their actions and outcomes, leading to improved future performance. This self-assessment can involve analyzing successes and failures to refine strategies.

Example:

An AI writing assistant reviewing its previous suggestions to enhance future recommendations.

Memory stores enable agents to:

Maintain context over extended interactions.

Personalize responses based on user history.

Learn from past experiences to avoid repeating mistakes.

Example:

A customer service chatbot recalling a user’s previous issues to provide tailored support.

Optimizing memory ensures that agents:

Retrieve relevant information efficiently.

Avoid information overload.

Balance between retaining useful data and discarding irrelevant details.

Techniques:

Implementing vector databases for semantic search.

Using summarization to condense past interactions.
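The semantic-search technique can be illustrated with a toy example. The sketch below uses bag-of-words counts as a stand-in for learned embeddings and a plain list as a stand-in for a vector database. Both are simplifying assumptions, and `embed`, `cosine`, and `search` are hypothetical helper names.

```python
import math
from collections import Counter


def embed(text):
    # Toy stand-in for an embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a, b):
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query, memories, top_k=1):
    # Rank stored memories by similarity to the query and keep the best ones.
    query_vec = embed(query)
    ranked = sorted(memories, key=lambda m: cosine(query_vec, embed(m)), reverse=True)
    return ranked[:top_k]


memories = [
    "user prefers email over phone calls",
    "order 1042 was delayed by the courier",
    "user asked about the refund policy",
]
print(search("what is the refund policy", memories))
# ['user asked about the refund policy']
```

Retrieving only the top few matches is what keeps the agent from flooding its prompt with every stored memory.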

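A context window refers to the amount of information an AI model can process at once, measured in tokens. For instance, the original GPT-4 was offered with 8,192-token and 32,768-token context windows, and later GPT-4 Turbo models extend this to 128,000 tokens, allowing the model to consider extensive prior interactions when generating responses.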
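Because the window is finite, agents often trim conversation history to fit a token budget. The sketch below keeps the most recent messages that fit. It approximates tokens by whitespace-separated words, which is an assumption made for simplicity since real tokenizers split text differently, and `fit_to_window` is a hypothetical helper name.

```python
def count_tokens(text):
    # Crude approximation: one token per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the budget."""
    kept, used = [], 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return kept[::-1]  # restore chronological order


history = [
    "Hi, I need help with my order",   # 7 tokens
    "Sure, what is the order number",  # 6 tokens
    "It is 1042",                      # 3 tokens
    "Thanks, checking that now",       # 4 tokens
]
print(fit_to_window(history, max_tokens=10))
# ['It is 1042', 'Thanks, checking that now']
```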

LLM observability involves monitoring and analyzing the behavior of large language models to ensure reliability and performance. It includes tracking metrics like response times, error rates, and usage patterns.

Tools:

OpenTelemetry for standardized metrics.

Langfuse for tracing and debugging LLM workflows.

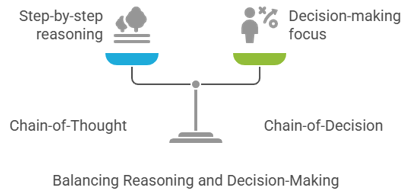

CoT reasoning involves the AI model articulating intermediate steps when solving a problem, leading to more accurate and interpretable outcomes.

Here is the code snippet you can refer to:

    from langchain.prompts import PromptTemplate

    template = PromptTemplate.from_template(
        "Question: {question}\nLet's think step by step."
    )

This prompt encourages the model to break down its reasoning process.

Chain-of-Thought (CoT): Encourages step-by-step reasoning.

Chain-of-Decision (CoD): Focuses on decision-making processes, outlining choices and consequences.

Use Case:

CoT is suited for problem-solving tasks, while CoD is ideal for scenarios requiring decision analysis.

Tracing involves monitoring the flow of data through an LLM pipeline, identifying each operation’s performance and outcomes.

Spans: Represent individual operations within a trace, capturing start and end times, inputs, and outputs.

Tool:

OpenTelemetry provides a framework for implementing tracing in AI systems.
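To show what a span captures, here is a standard-library sketch. It is not the OpenTelemetry API: the `span` context manager and the `TRACE` list are hypothetical stand-ins that record the same kind of data (name, attributes, start and end times, outputs) for each operation.

```python
import time
from contextlib import contextmanager

TRACE = []  # collected spans for one trace


@contextmanager
def span(name, **attributes):
    """Record the start time, end time, and attributes of one operation."""
    record = {"name": name, "attributes": attributes, "start": time.perf_counter()}
    try:
        yield record
    finally:
        record["end"] = time.perf_counter()
        record["duration"] = record["end"] - record["start"]
        TRACE.append(record)


with span("retrieve", query="refund policy") as s:
    s["output"] = ["doc-1", "doc-2"]  # stand-in for a retriever call

with span("generate", model="hypothetical-llm") as s:
    s["output"] = "Refunds are accepted within 30 days."  # stand-in for an LLM call

for record in TRACE:
    print(record["name"], f"{record['duration']:.6f}s")
```

The `finally` clause means a span is still recorded when the wrapped operation raises, which is usually when a trace is most useful.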

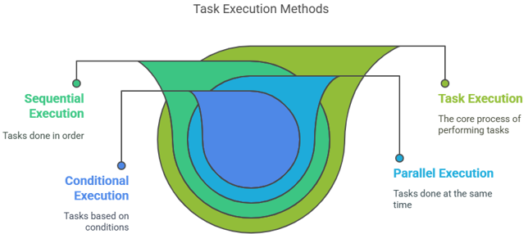

Sequential Execution: Tasks are performed one after another.

Parallel Execution: Multiple tasks are executed simultaneously.

Conditional Execution: Tasks are performed based on specific conditions.

Example:

An AI agent processing multiple user requests in parallel to improve efficiency.

Agents assess task dependencies and resource availability to determine the optimal execution strategy. Parallel execution increases speed but may require more resources, while sequential execution is resource-efficient but slower.
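The speed trade-off is easy to see with independent, I/O-bound tasks. The sketch below simulates four requests that each wait 0.1 seconds, first one after another and then in a thread pool. The `handle_request` function is a hypothetical stand-in for an API or database call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id):
    time.sleep(0.1)  # simulate waiting on an API or database
    return f"done-{request_id}"


requests = [1, 2, 3, 4]

# Sequential execution: one request at a time.
start = time.perf_counter()
sequential = [handle_request(r) for r in requests]
sequential_time = time.perf_counter() - start

# Parallel execution: four worker threads handle the requests concurrently.
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(handle_request, requests))
parallel_time = time.perf_counter() - start

print(sequential == parallel)  # True: same results, same order
print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

This only works because the requests do not depend on each other. Tasks where one step needs the previous step's output still have to run sequentially.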

RAG combines information retrieval with text generation, enabling AI models to access external data sources to produce more accurate and contextually relevant responses.

Example:

An AI assistant retrieving the latest news articles to answer user queries.
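A minimal sketch of the retrieve-then-generate flow follows. It assumes a toy keyword-overlap retriever in place of a real search index and stops at prompt construction, since the generation step depends on which LLM is used. The names `retrieve` and `build_prompt` are hypothetical.

```python
import re


def words(text):
    # Lowercase word set, ignoring punctuation.
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, top_k=2):
    # Score each document by how many query words it shares (toy retriever).
    scored = [(len(words(query) & words(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, context_docs):
    # Augment the user's question with the retrieved passages.
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


documents = [
    "Refunds are accepted within 30 days of purchase.",
    "Shipping takes 3 to 5 business days.",
    "Support is available on weekdays.",
]
query = "how many days for refunds"
prompt = build_prompt(query, retrieve(query, documents))
print(prompt)
```

The resulting prompt would then be sent to the language model, which grounds its answer in the retrieved passages instead of relying only on its training data.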

Multimodality refers to AI systems’ ability to process and integrate multiple data types, such as text, images, and audio, enhancing their understanding and interaction capabilities.

Example:

Google’s Project Astra demonstrates multimodal capabilities by interpreting visual and textual inputs.

Agents access databases, APIs, or documents to retrieve information, enriching their responses and decision-making processes.

Example:

A medical AI agent consulting a database of clinical guidelines to provide treatment recommendations.

Agentic AI systems can autonomously handle increasing workloads by:

Automating repetitive tasks.

Adapting to new challenges without manual intervention.

Integrating seamlessly with existing infrastructure.

Real-time augmentation allows agents to:

Access up-to-date information.

Adjust responses based on current context.

Enhance decision-making accuracy.

Example:

A financial AI agent analyzing live market data to provide investment advice.

By integrating generative models like DALL·E or Stable Diffusion, agents can create visual content based on textual prompts, aiding in creative tasks and data enhancement.

Example:

An AI design assistant generating website mockups based on user specifications.

Building Agentic AI is hard due to complexity, integration, and unpredictability.

Managing memory and context across long interactions.

Ensuring reliability and low latency in real-time.

Securing sensitive user data.

Handling unpredictable or hallucinated outputs.

Robust prompts reduce errors and improve consistency in agent responses.

Use structured, tested prompt templates.

Include fail-safes and fallback responses.

Test across varied inputs (prompt fuzzing).

Implement monitoring and version control.
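One way to implement a fail-safe is to validate the model's output and fall back to a safe default when validation keeps failing. The sketch below assumes the agent expects JSON with an `intent` key. The `call_with_fallback` helper and the two stand-in model functions are hypothetical and exist only to exercise both paths.

```python
import json

FALLBACK = {"intent": "unknown", "reply": "Sorry, I could not process that. Routing you to a human agent."}


def call_with_fallback(llm, prompt, max_attempts=2):
    """Call `llm`, check that the reply is JSON with an 'intent' key, else fall back."""
    for _ in range(max_attempts):
        raw = llm(prompt)
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed output: retry
        if isinstance(parsed, dict) and "intent" in parsed:
            return parsed
    return FALLBACK


# Hypothetical stand-in models, used only to exercise both paths.
def good_llm(prompt):
    return '{"intent": "refund", "reply": "I can help with that."}'


def bad_llm(prompt):
    return "I think the intent is refund"


print(call_with_fallback(good_llm, "Classify: I want my money back"))
print(call_with_fallback(bad_llm, "Classify: I want my money back"))
```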

Debugging involves tracing, testing, and logging steps in the pipeline.

Use tools like LangSmith, Langfuse, or OpenTelemetry.

Log intermediate steps and spans.

Run unit tests for each module (retriever, planner, executor).

Visualize chains with trace viewers.

Evaluation blends metrics, feedback, and task success rates.

Accuracy (task correctness).

Latency (response time).

User satisfaction (CSAT/NPS).

Evaluation frameworks like RAGAS or OpenAI evals.
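Accuracy and latency can be measured with a small harness before reaching for a full framework. The sketch below is an assumption-laden illustration: `evaluate` and `toy_agent` are hypothetical names, and exact string matching is only one possible definition of task correctness.

```python
import time
from statistics import mean


def evaluate(agent, test_cases):
    """Return accuracy and mean latency for an agent over (input, expected) pairs."""
    correct, latencies = 0, []
    for question, expected in test_cases:
        start = time.perf_counter()
        answer = agent(question)
        latencies.append(time.perf_counter() - start)
        correct += answer == expected  # True counts as 1
    return {"accuracy": correct / len(test_cases), "mean_latency_s": mean(latencies)}


def toy_agent(question):
    # Hypothetical agent under test: answers from a fixed lookup table.
    return {"2+2": "4", "capital of France": "Paris"}.get(question, "unknown")


cases = [("2+2", "4"), ("capital of France", "Paris"), ("3*3", "9")]
report = evaluate(toy_agent, cases)
print(f"accuracy: {report['accuracy']:.2f}")  # accuracy: 0.67
print(f"mean latency: {report['mean_latency_s']:.6f}s")
```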

HITL adds human oversight to AI tasks for safety and quality.

Used in high-risk domains (medicine, law, finance).

Allows manual approval before final action.

Improves training via human feedback (RLHF).

Agents define when to ask humans based on confidence or context.

Confidence thresholds decide when to escalate.

Human reviews for critical decisions.

Gradual autonomy based on trust levels.
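The escalation rules above can be sketched as a small routing function. The threshold value, the list of critical actions, and the name `route_decision` are all hypothetical choices made for illustration. In practice they would be tuned per domain.

```python
def route_decision(action, confidence, threshold=0.8, critical_actions=("refund", "delete_account")):
    """Return 'auto' to execute directly or 'human' to request approval."""
    if action in critical_actions:
        return "human"  # critical decisions always get a human review
    if confidence < threshold:
        return "human"  # low confidence: escalate
    return "auto"


print(route_decision("send_faq_link", confidence=0.95))  # auto
print(route_decision("send_faq_link", confidence=0.55))  # human
print(route_decision("refund", confidence=0.99))         # human
```

Gradual autonomy then amounts to lowering `threshold` or shrinking `critical_actions` as the agent earns trust.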

Stateful agents remember context to make better decisions over time.

Retain conversation or task history.

Reduce repetition, improve personalization.

Enable context-aware long-term workflows.

Agents automate tasks and reduce human overhead.

Fewer support staff needed for repetitive tasks.

Better resource utilization.

Faster response reduces downtime.

Agents offer fast, intelligent, and personalized support 24/7.

Handle common queries instantly.

Escalate complex issues to humans.

Learn from past conversations.

Yes, agents adapt to new tasks or conditions without reprogramming.

Dynamic workflows based on context.

Self-correction via reflection.

Integration with APIs for real-time actions.

Agents tailor actions to user goals, constraints, and context.

Use profiles, history, and preferences.

Adapt strategies to different users or domains.

Leverage memory and learning.

Agents make NPCs smarter, content dynamic, and games immersive.

AI-powered storytelling and character dialogue.

Adaptive difficulty or missions.

Generative content (levels, textures).

IoT feeds real-time data into agents for smarter actions.

Agents monitor and control devices (e.g., smart homes).

Predictive maintenance in manufacturing.

Real-time decision-making from sensor input.

AI agents assist doctors, automate paperwork, and improve diagnoses.

Clinical decision support tools.

Triage and patient scheduling bots.

Personalized treatment planning.

Agents divide, delegate, and synchronize sub-tasks via messaging.

Planner assigns goals to specialist agents.

Agents share outputs (e.g., Retriever → Writer).

Feedback loops ensure coherence.
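A planner handing work to specialist agents can be sketched with a message queue. Everything here is a simplified assumption: the `retriever` and `writer` functions stand in for LLM-backed agents, and splitting the goal on the word "and" stands in for real task decomposition.

```python
from queue import Queue


def retriever(task):
    # Specialist agent 1: a stand-in that "finds" notes for the topic.
    return {"topic": task, "notes": [f"fact about {task}"]}


def writer(message):
    # Specialist agent 2: turns the retriever's notes into a draft.
    return f"Report on {message['topic']}: " + "; ".join(message["notes"])


def planner(goal):
    """Split the goal into sub-tasks and pass messages Retriever -> Writer."""
    inbox = Queue()
    for sub_task in goal.split(" and "):
        inbox.put(retriever(sub_task))
    drafts = []
    while not inbox.empty():
        drafts.append(writer(inbox.get()))
    return drafts


for draft in planner("solar power and wind power"):
    print(draft)
# Report on solar power: fact about solar power
# Report on wind power: fact about wind power
```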

Design with monitoring, anomaly detection, and rapid countermeasures.

Ingest logs from endpoints and firewalls.

Use ML for intrusion detection.

Automate alerts or responses via playbooks.

A financial advisory agent would analyze market trends, client goals, and risks to advise on investments.

Retrieve real-time financial data.

Risk profiling using user history.

Personalized portfolio suggestions.

A healthcare agent would help with diagnosis, treatment planning, and patient coordination.

EHR integration for patient context.

Suggest tests, flag anomalies.

Coordinate appointments and medication.

LLMs help agents decide what to do and in what order.

Task decomposition from high-level goals.

Prioritize actions based on constraints.

Can create step-by-step execution plans.

Agentic AI will move toward autonomy, personalization, and cross-domain collaboration.

Multi-agent ecosystems for complex workflows.

Personalized AI assistants for all.

Regulatory and ethical maturity.

AutoGPT and BabyAGI are open-source experimental agents that plan and execute tasks on their own.

AutoGPT: Uses an LLM together with memory and tools to complete goals.

BabyAGI: A minimal task manager that recursively breaks goals into sub-tasks.

Agents act, plan, and adapt, whereas chatbots mostly respond to inputs.

| Feature | Chatbot | Agentic AI |
|---|---|---|
| Planning | No | Yes |
| Memory | Limited | Long-term, contextual |
| Autonomy | None | High |
| Tool usage | Rare | Integrated (APIs, DBs, etc.) |

Despite progress, agents still lack robustness, generalization, and ethics.

Prone to hallucination or failure in novel tasks.

High computational cost.

Limited true understanding or reasoning.

To prepare for an AI interview, follow a structured approach targeting both technical depth and problem-solving ability:

Understand key concepts: machine learning, neural networks, supervised vs unsupervised learning, overfitting, regularization.

Brush up on linear algebra, calculus, probability, and statistics.

Solve problems on platforms like LeetCode, HackerRank, and Kaggle.

Be comfortable with Python libraries like NumPy, Pandas, Scikit-learn, PyTorch, or TensorFlow.

Know how CNNs, RNNs, Transformers, and GANs work.

Be ready to discuss use cases and trade-offs.

Understand data pipelines, model deployment, MLOps, and scalability.

Be ready to design AI systems (e.g., recommendation engines, fraud detection).

Be able to explain your previous work: problem statement, approach, evaluation metrics, and outcomes.

Practice explaining complex ideas simply.

Prepare STAR-format answers (Situation, Task, Action, Result) for soft skill questions.

Pro Tip:

Keep up with the latest research papers and industry trends via arXiv, Papers with Code, and newsletters like “The Batch” by Andrew Ng.

The four main rules that guide the behavior of AI agents are:

Autonomy

Agents must operate independently with minimal human input.

Perception

Agents should sense and interpret their environment through sensors or input data.

Rationality

Agents should always act to maximize their expected performance measure.

Learning

Agents should improve their performance over time based on experience and data.

These rules ensure that an AI agent behaves effectively, adapts to new conditions, and strives to achieve its goals.

AI agents can be classified into five types based on their capabilities and complexity:

1. Simple Reflex Agents

Act based on current percept, ignoring the history.

Rule-based, fast but not adaptable.

Example:

A vacuum cleaner that turns right when it hits a wall.

2. Model-Based Reflex Agents

Maintain an internal state to track aspects of the world not evident in the current percept.

More flexible than simple reflex agents.

Example:

A robot that maps its surroundings to decide the next move.

3. Goal-Based Agents

Use goals to guide decision-making.

Choose actions based on whether they move the agent closer to its goals.

Example:

A navigation app finding the best route to a destination.

4. Utility-Based Agents

Use a utility function to measure preference among different outcomes.

Select the action that offers the highest utility.

Example:

A self-driving car that considers safety, speed, and passenger comfort simultaneously.
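The self-driving example can be written as a weighted utility function. The weights and the scores for each candidate action are invented for illustration only. A real system would derive them from models and sensor data.

```python
WEIGHTS = {"safety": 0.6, "speed": 0.2, "comfort": 0.2}  # hypothetical preferences


def utility(outcome):
    # Weighted sum of the outcome's scores (each score is between 0 and 1).
    return sum(WEIGHTS[k] * outcome[k] for k in WEIGHTS)


def choose_action(options):
    # Pick the action whose predicted outcome has the highest utility.
    return max(options, key=lambda name: utility(options[name]))


options = {
    "overtake":   {"safety": 0.4, "speed": 0.9, "comfort": 0.5},
    "keep_lane":  {"safety": 0.9, "speed": 0.5, "comfort": 0.8},
    "brake_hard": {"safety": 0.8, "speed": 0.1, "comfort": 0.2},
}
print(choose_action(options))  # keep_lane
```

Unlike a goal-based agent, which only asks whether an action reaches the goal, this agent compares how good each outcome is across several competing criteria.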

5. Learning Agents

Continuously improve by learning from the environment and their own performance.

Includes a learning element, a performance element, a critic, and a problem generator.

Example:

AlphaGo, which learned to play Go at a superhuman level through self-play.
