Apache HBase is an open-source, distributed, non-relational database modeled after Google’s Bigtable and written in Java. It provides Bigtable-like capabilities on top of Hadoop and HDFS (Hadoop Distributed File System): a fault-tolerant way of storing large quantities of sparse data, which are common in many big data use cases. HBase is used for real-time read/write access to big data.

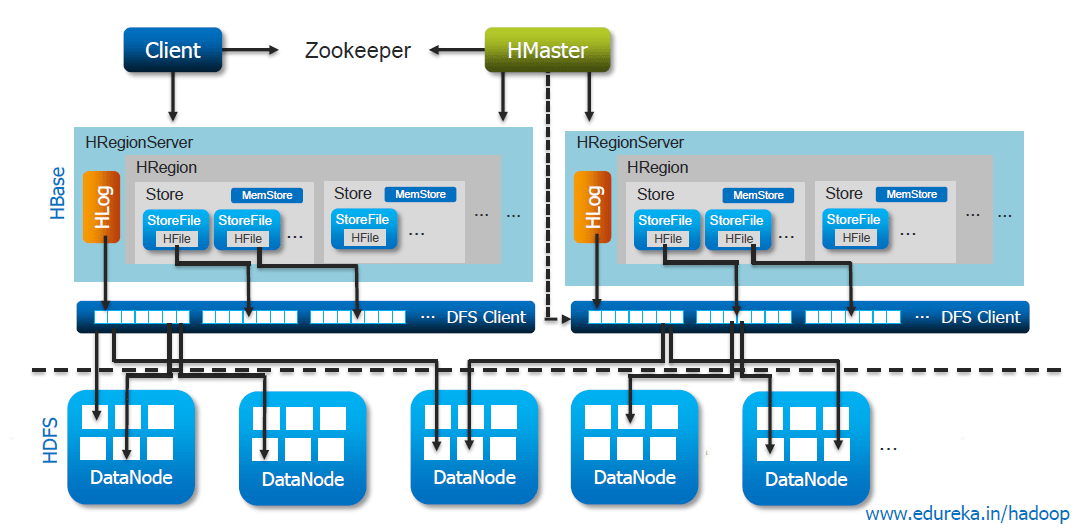

The HBase storage architecture comprises several components. Let’s look at what these components do and how data is written.

HFiles:

HFiles form the lowest level of HBase’s architecture. They are the storage files in which HBase keeps its data, designed for fast and efficient access.

HMaster:

The HMaster is responsible for assigning regions to each HRegionServer when HBase starts. It manages everything related to rows, tables and their coordination activities. The HMaster also holds the metadata details.

HBase has the following components:

HBase stores its data directly in HDFS and relies heavily on HDFS’s high availability and fault tolerance.

The general flow is that a client first contacts ZooKeeper when it needs to find a particular row key. From ZooKeeper it retrieves the name of the server that hosts the root region (-ROOT-). With this information it can query that server to find the server that holds the meta table (.META.). Both of these locations are cached, so they are looked up only once. Lastly, the client queries the server holding the meta table to find the server that has the row it is looking for.
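The lookup chain above can be sketched as a small simulation. This is a toy model, not the real HBase client: the class `LookupClient`, the key `"root-region-server"`, the server names `rs1` to `rs4` and the use of plain dictionaries as "servers" are all assumptions made for illustration. It also maps exact row keys to servers, whereas a real meta table maps row-key ranges (regions) to servers.

```python
class LookupClient:
    """Toy model of the client-side lookup chain (hypothetical, not the HBase API)."""

    def __init__(self, zookeeper, servers):
        self.zookeeper = zookeeper    # dict: well-known key -> server name
        self.servers = servers        # dict: server name -> lookup table it holds
        self.root_server = None       # cached after the first ZooKeeper call
        self.meta_server = None       # cached after the first query to the root server
        self.region_cache = {}        # row key -> server that holds the row
        self.remote_calls = 0         # counts simulated network round trips

    def _call(self, table, key):
        # Every read from a remote table counts as one round trip.
        self.remote_calls += 1
        return table[key]

    def locate(self, row_key):
        # Fast path: the location of this row is already cached.
        if row_key in self.region_cache:
            return self.region_cache[row_key]
        # Step 1: ask ZooKeeper which server to start from (done only once).
        if self.root_server is None:
            self.root_server = self._call(self.zookeeper, "root-region-server")
        # Step 2: ask that server which server holds the meta table (done only once).
        if self.meta_server is None:
            self.meta_server = self._call(self.servers[self.root_server], "meta")
        # Step 3: ask the meta table which server holds the row, then cache it.
        region_server = self._call(self.servers[self.meta_server], row_key)
        self.region_cache[row_key] = region_server
        return region_server


zookeeper = {"root-region-server": "rs1"}
servers = {
    "rs1": {"meta": "rs2"},                        # holds the pointer to the meta table
    "rs2": {"row-001": "rs3", "row-500": "rs4"},   # simplified meta table
}

client = LookupClient(zookeeper, servers)
first = client.locate("row-001")    # three round trips: ZooKeeper, root, meta
calls_after_first = client.remote_calls
second = client.locate("row-001")   # served entirely from the cache
calls_after_second = client.remote_calls
third = client.locate("row-500")    # only the meta table is queried again
calls_after_third = client.remote_calls
```

In this sketch the first lookup costs three round trips, a repeated lookup costs none, and a lookup for a new row costs one, which is the effect the caching is meant to have.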

Once the client knows which region the row resides in, it caches this information as well and contacts the HRegionServer directly. Over time, the client therefore builds up complete information about where to get rows from, without needing to query the meta table again.

When an HRegion is opened, it sets up a Store instance for each HColumnFamily of the table. Data is written when the client issues a request to the HRegionServer, which passes the details to the matching HRegion instance. The first step is to decide whether the data should first be written to the write-ahead log (WAL), represented by the HLog class. This decision is based on a flag set by the client.

Once the data has been written to the WAL, it is placed in the MemStore. At the same time, the MemStore is checked to see whether it is full, and if so a flush to disk is requested. The flush writes the data to an HFile.
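The write path described above can be sketched as a short simulation. This is only an illustration under stated assumptions: the class `Region`, the `write_to_wal` parameter and the `flush_threshold` setting are hypothetical names, the "full" check counts entries rather than bytes, and the WAL and HFiles are plain Python lists rather than files on HDFS.

```python
class Region:
    """Toy model of the write path: WAL, then MemStore, then flush to a file."""

    def __init__(self, flush_threshold=3):
        self.wal = []            # append-only log of edits (stand-in for the HLog)
        self.memstore = {}       # in-memory buffer of recent writes
        self.hfiles = []         # each flush appends one sorted, immutable "file"
        self.flush_threshold = flush_threshold  # assumption: counted in entries

    def put(self, row_key, value, write_to_wal=True):
        # Step 1: the client's flag decides whether the edit is logged first.
        if write_to_wal:
            self.wal.append((row_key, value))
        # Step 2: the edit is placed in the MemStore.
        self.memstore[row_key] = value
        # Step 3: if the MemStore is full, flush it to disk.
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def flush(self):
        # Write the buffered entries out sorted by row key, then clear the buffer.
        self.hfiles.append(sorted(self.memstore.items()))
        self.memstore = {}


region = Region(flush_threshold=3)
region.put("row-b", "2")
region.put("row-a", "1")
region.put("row-c", "3", write_to_wal=False)   # skips the WAL, still reaches the MemStore
```

After the third `put`, the MemStore reaches its threshold and is flushed, so `region.hfiles` holds one sorted file and `region.memstore` is empty. The edit written with `write_to_wal=False` never appears in `region.wal`, which is why skipping the WAL trades durability for speed: such an edit cannot be replayed if the server fails before the flush.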

Got a question for us? Mention it in the comments section and we will get back to you.
